In a previous article, I referred to my early Artificial Intelligence (AI) investigations in the ’80s. Although the ambition was great at that time, practical results were few. Nowadays everybody is talking about a renaissance in the AI field, especially since the public release of generative AI tools in the last few years.

I’ve been experimenting with generative AI tools in the text and graphics domains and would like to share my initial findings. But first, we need some theory to understand how these tools work.

Large Language Models (LLMs)

Although AI research using neural networks[1] has been going on for a long time, fast progress only became possible with the rapid evolution of Large Language Models (LLMs). LLMs aim to achieve general-purpose language understanding and generation. As autoregressive language models, they work by taking an input text and repeatedly predicting the next token (a word or part of a word).
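The autoregressive loop (predict a token, append it, predict again) can be sketched with a toy stand-in for the model. In this minimal sketch, assuming a hypothetical three-sentence corpus, simple bigram counts (which word most often follows the current one) play the role of the neural network, and the most likely token is always chosen (greedy decoding). A real LLM conditions on the whole preceding text rather than just the last word, and often samples instead of always taking the top choice.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    """Count, for each token, which tokens follow it (a toy stand-in for an LLM)."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, nxt in zip(tokens, tokens[1:]):
            follows[current][nxt] += 1
    return follows


def generate(model: dict[str, Counter], prompt: str, max_new_tokens: int = 5) -> str:
    """Autoregressive loop: predict one token, append it, feed the result back in."""
    tokens = prompt.lower().split()
    for _ in range(max_new_tokens):
        candidates = model.get(tokens[-1])
        if not candidates:  # no known continuation: stop early
            break
        next_token, _ = candidates.most_common(1)[0]  # greedy: take the most frequent
        tokens.append(next_token)
    return " ".join(tokens)


# Hypothetical toy corpus.
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a dog sat on the rug",
]
model = train_bigram(corpus)
print(generate(model, "a dog", max_new_tokens=4))  # a dog sat on the cat
```

The nonsensical ending shows why a model needs more context than the previous word: "the" is most often followed by "cat" in this corpus, whatever came before it.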

Towards the end of the 20th century, a specific kind of Recurrent Neural Network (RNN) called Long Short-Term Memory (LSTM) was developed to handle longer sequential data such as time series, speech and text. After advances in Natural Language Processing (NLP) in the first decade of the 21st century, Google intensified its AI research through the Google Brain team. In 2017, Google introduced the Transformer, a deep learning architecture based on a parallel multi-head attention mechanism. Google showed that the Transformer outperformed recurrent and convolutional neural networks[2] in tasks such as machine translation.

Between 2018 and 2023, transformers were used in pre-trained models such as BERT and GPT, resulting in the recent proliferation of models.

Word Embeddings

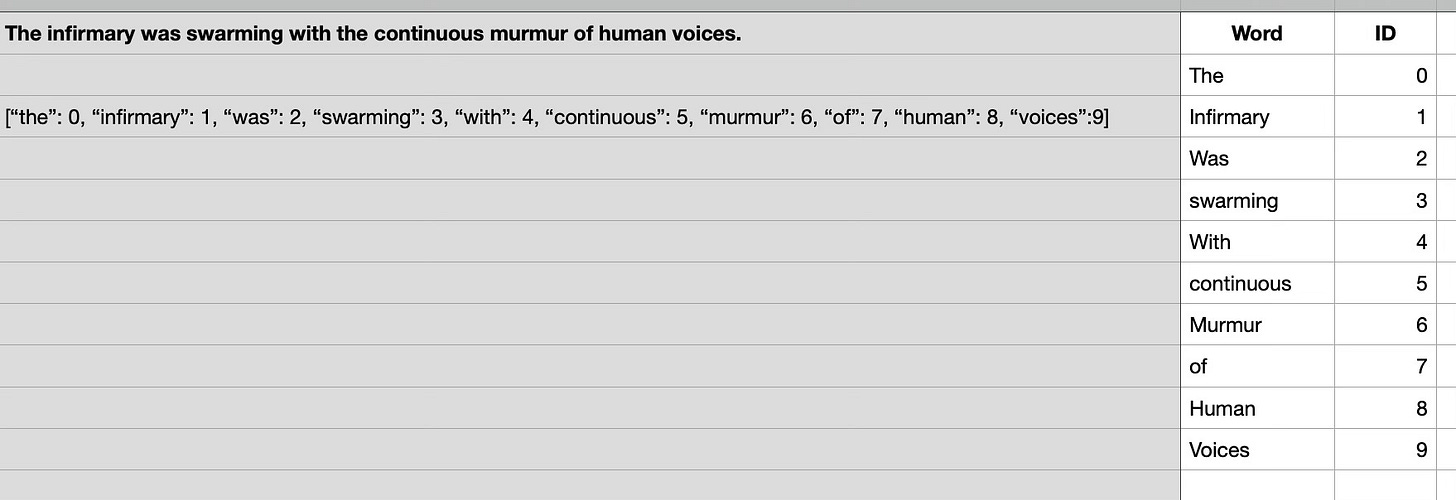

The key to generating plausible text lies in establishing a connection or affinity between different words. The first step is to associate each word with a numerical value. There are various methods for this; the simplest would be assigning a unique identifier (integer) to each word.
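The simplest scheme, one integer per word, can be sketched in a few lines. This is a minimal illustration, assuming a naive tokenizer (lower-case, drop the full stop, split on spaces); `tokenize` and `build_vocabulary` are hypothetical helper names, and the sentence is the example used in this article.

```python
def tokenize(sentence: str) -> list[str]:
    """Naive tokenizer: lower-case, drop the full stop, split on whitespace."""
    return sentence.lower().replace(".", "").split()


def build_vocabulary(words: list[str]) -> dict[str, int]:
    """Give each distinct word a unique integer ID, in order of first appearance."""
    vocab: dict[str, int] = {}
    for word in words:
        vocab.setdefault(word, len(vocab))  # existing words keep their first ID
    return vocab


sentence = "The infirmary was swarming with the continuous murmur of human voices."
words = tokenize(sentence)
vocab = build_vocabulary(words)
ids = [vocab[word] for word in words]
print(ids)  # [0, 1, 2, 3, 4, 0, 5, 6, 7, 8, 9]
```

Both occurrences of "the" share ID 0, but nothing in these numbers says that "murmur" (6) is more closely related to "voices" (9) than to "of" (7).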

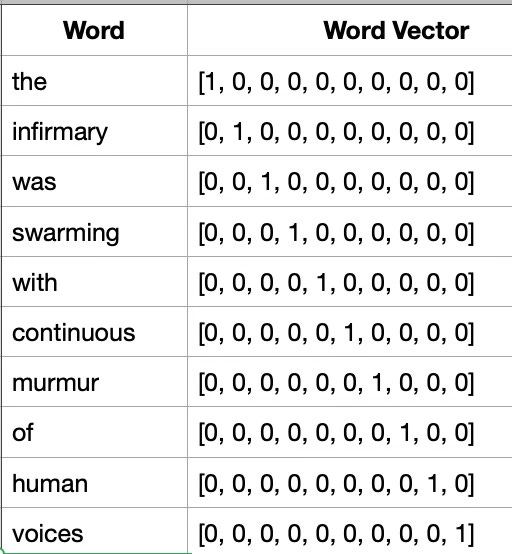

The infirmary was swarming with the continuous murmur of human voices.

However, this is a very simple and rather useless embedding, as it defines no relation between the words. To resolve this issue, each word is instead represented by a vector that carries information about the words appearing together with it, or close to it, in sentences. These vectors can be produced by shallow neural networks that have a single hidden layer between the input and output layers.
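The neural approach learns these vectors, but the underlying intuition, that a word is characterised by the words around it, can be sketched with plain co-occurrence counts. This is a count-based stand-in, not the neural method described here; the three sentences are hypothetical toy data and the neighbourhood of two words on each side is an arbitrary choice.

```python
import math
from collections import Counter


def cooccurrence_vectors(sentences: list[str], window: int = 2) -> dict[str, Counter]:
    """Map each word to a sparse vector counting the words within `window` positions of it."""
    vectors: dict[str, Counter] = {}
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            neighbours = words[max(0, i - window):i] + words[i + 1:i + 1 + window]
            vectors.setdefault(word, Counter()).update(neighbours)
    return vectors


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical toy corpus.
sentences = [
    "the nurse heard human voices in the infirmary",
    "the doctor heard human voices in the ward",
    "the river flowed past the old mill",
]
vectors = cooccurrence_vectors(sentences)
print(round(cosine(vectors["nurse"], vectors["doctor"]), 3))  # 1.0
print(round(cosine(vectors["nurse"], vectors["river"]), 3))   # 0.333
```

"nurse" and "doctor" never appear together, yet their vectors match because they share neighbours; with a realistic corpus the counts become huge and sparse, which is one motivation for learning compact vectors instead.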

Vectors are useful, but the vector associated with each word has to reflect which other words tend to appear close to it. We should also remember that this only makes sense if there is a huge set of training data, so that there is a clear picture of which words are typically used with which other words.

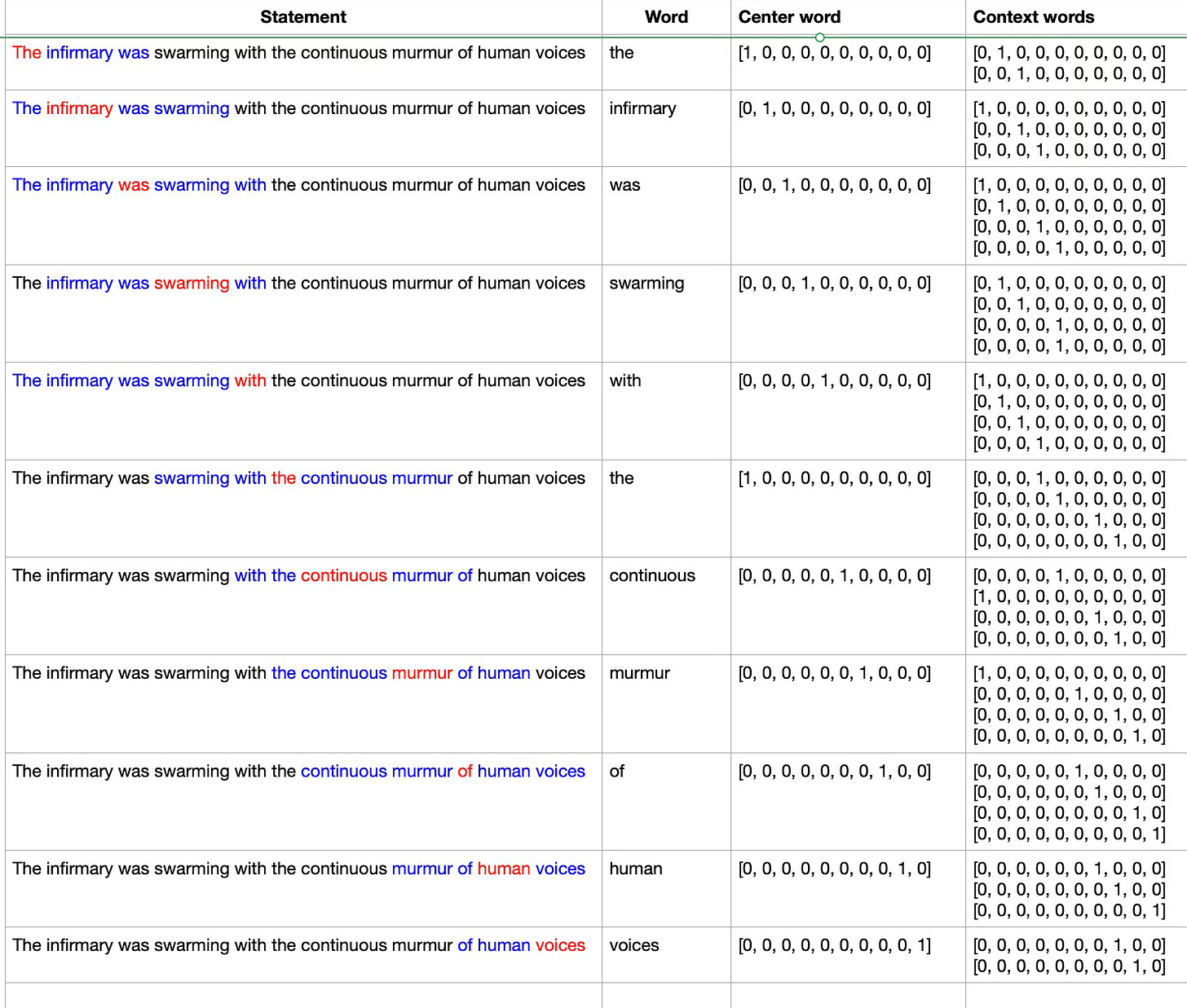

There are two common methods for calculating the vectors from the words. The Continuous Bag of Words (CBOW) method looks at the context of a word using a pre-defined window size. Using the sentence above and a window size of 5, we can get the relevant center and context words, as shown in the figure. Red words are center words and blue words are context words.
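Extracting the (context, center) training pairs can be sketched as follows. This assumes that a "window size of 5" means the center word plus two words on each side; some implementations instead count the window per side. `cbow_pairs` is a hypothetical helper name.

```python
def cbow_pairs(words: list[str], window_size: int = 5) -> list[tuple[list[str], str]]:
    """Return (context words, center word) pairs for CBOW training.

    Assumption: `window_size` is the total span, i.e. the center word plus
    (window_size - 1) // 2 words on each side; windows are clipped at sentence edges.
    """
    half = (window_size - 1) // 2
    pairs = []
    for i, center in enumerate(words):
        context = words[max(0, i - half):i] + words[i + 1:i + 1 + half]
        pairs.append((context, center))
    return pairs


words = "the infirmary was swarming with the continuous murmur of human voices".split()
for context, center in cbow_pairs(words)[:3]:
    print(f"{context} -> {center}")
```

The first two centers have shorter contexts because the window is cut off at the start of the sentence.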

These words are then converted to vectors of real numbers, and the center word can be predicted from these numbers and the weights of the neural network. The main weakness of CBOW is that it captures the affinity between words but, because the context words are combined (typically averaged), it ignores their order. It is also computationally intensive, as it can involve multiplying huge, potentially sparse matrices.
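A single CBOW prediction can be sketched in plain Python to show both points above: multiplying a sparse one-hot vector by the weight matrix merely selects one row, and averaging the context vectors throws away word order. This is a minimal sketch with random, untrained weights; it assumes a 10-word vocabulary (the distinct words of the example sentence, numbered in order of first appearance) and an arbitrary vector size of 4.

```python
import math
import random


def one_hot(index: int, size: int) -> list[float]:
    """A vector of zeros with a single 1.0 at `index`."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def vec_matmul(vec: list[float], matrix: list[list[float]]) -> list[float]:
    """Row vector times matrix, computed densely (every zero is multiplied too)."""
    return [sum(v * matrix[r][c] for r, v in enumerate(vec)) for c in range(len(matrix[0]))]


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cbow_forward(context_ids: list[int], w_in, w_out) -> list[float]:
    """Return a probability for every vocabulary word being the center word."""
    dim = len(w_in[0])
    # One-hot times w_in just selects a row, so look the rows up and average them.
    hidden = [sum(w_in[i][d] for i in context_ids) / len(context_ids) for d in range(dim)]
    return softmax(vec_matmul(hidden, w_out))


rng = random.Random(0)
vocab_size, dim = 10, 4
w_in = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(vocab_size)]   # input -> hidden
w_out = [[rng.uniform(-1, 1) for _ in range(vocab_size)] for _ in range(dim)]  # hidden -> output

# Context "the infirmary _ swarming with" (IDs 0, 1, 3, 4); the center word "was" has ID 2.
probs = cbow_forward([0, 1, 3, 4], w_in, w_out)
shuffled = cbow_forward([4, 3, 1, 0], w_in, w_out)
print(vec_matmul(one_hot(3, vocab_size), w_in) == w_in[3])        # True: a row lookup
print(all(math.isclose(a, b) for a, b in zip(probs, shuffled)))   # True: word order is lost
```

With untrained weights the probabilities are meaningless; training adjusts `w_in` and `w_out` so that the true center word gets the highest probability.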

Another approach to calculating word vectors is the skip-gram method. It works the other way round: instead of predicting the center word from its context, it takes a single word as input and predicts the context words within a range before and after it. This makes it better at handling rarely occurring words.
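Skip-gram training data is the mirror image of CBOW's: every word is paired separately with each of its neighbours. A minimal sketch, assuming the same reading of "window size of 5" (two words on each side); `skipgram_pairs` is a hypothetical helper name.

```python
def skipgram_pairs(words: list[str], window_size: int = 5) -> list[tuple[str, str]]:
    """Return (input word, context word) training pairs for skip-gram.

    Assumption: `window_size` is the total span, so each word is paired with up to
    (window_size - 1) // 2 neighbours on each side.
    """
    half = (window_size - 1) // 2
    pairs = []
    for i, center in enumerate(words):
        for j in range(max(0, i - half), min(len(words), i + half + 1)):
            if j != i:
                pairs.append((center, words[j]))
    return pairs


words = "the infirmary was swarming with the continuous murmur of human voices".split()
pairs = skipgram_pairs(words)
print([context for center, context in pairs if center == "was"])
# ['the', 'infirmary', 'swarming', 'with']
```

One common explanation for the better handling of rare words is visible here: each occurrence of a word, however rare, produces its own training pairs instead of being averaged into someone else's context.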

Both approaches ultimately aim to estimate the weights of the hidden layer of the neural network: once training is complete, these weights serve as the word vectors.
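The whole CBOW pipeline, ending with the learned hidden-layer weights, can be sketched as a tiny training loop. This is a minimal illustration, assuming a full softmax over the vocabulary, plain stochastic gradient descent, and arbitrary hyperparameters (8 dimensions, learning rate 0.1, 200 epochs); practical implementations use tricks such as negative sampling to avoid scoring the whole vocabulary at every step.

```python
import math
import random


def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train_cbow(pairs, vocab_size, dim=8, lr=0.1, epochs=200, seed=0):
    """Train a minimal CBOW model with a full softmax and plain SGD.

    Returns the input-to-hidden weights (row i is the vector for word i)
    and the average loss of each epoch.
    """
    rng = random.Random(seed)
    w_in = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(vocab_size)]
    w_out = [[rng.uniform(-0.5, 0.5) for _ in range(vocab_size)] for _ in range(dim)]
    history = []
    for _ in range(epochs):
        total_loss = 0.0
        for context, target in pairs:
            # Forward: average the context rows, score every word, normalise.
            h = [sum(w_in[c][d] for c in context) / len(context) for d in range(dim)]
            probs = softmax([sum(h[d] * w_out[d][j] for d in range(dim))
                             for j in range(vocab_size)])
            total_loss -= math.log(probs[target])
            # Backward: the cross-entropy gradient w.r.t. the scores is probs - one_hot(target).
            grad = [p - (1.0 if j == target else 0.0) for j, p in enumerate(probs)]
            grad_h = [sum(w_out[d][j] * grad[j] for j in range(vocab_size)) for d in range(dim)]
            for d in range(dim):
                for j in range(vocab_size):
                    w_out[d][j] -= lr * h[d] * grad[j]
            for c in context:  # only the rows of the context words are updated
                for d in range(dim):
                    w_in[c][d] -= lr * grad_h[d] / len(context)
        history.append(total_loss / len(pairs))
    return w_in, history


words = "the infirmary was swarming with the continuous murmur of human voices".split()
vocab = {word: i for i, word in enumerate(dict.fromkeys(words))}  # 10 distinct words
ids = [vocab[w] for w in words]
# Window size 5: up to two context words on each side of every center word.
pairs = [(ids[max(0, i - 2):i] + ids[i + 1:i + 3], center) for i, center in enumerate(ids)]
w_in, history = train_cbow(pairs, vocab_size=len(vocab))
print(f"average loss: {history[0]:.3f} -> {history[-1]:.3f}")
print("vector for 'murmur':", [round(x, 2) for x in w_in[vocab["murmur"]]])
```

After training, the output layer is usually discarded and the rows of `w_in` are kept as the word vectors. With a single sentence the model simply memorises it; meaningful vectors need a very large corpus.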

Transformer architecture

The Transformer is a relatively new machine learning architecture that is well suited to language understanding. Initially proposed by Google Brain engineers in 2017, it has become the dominant architecture in language modelling, machine translation and question answering.

This architecture uses encoders and decoders. The encoder maps an input sequence to a series of continuous representations (encodings). Each encoder layer consists of a self-attention mechanism and a feed-forward neural network. The self-attention mechanism accepts the encodings from the previous encoder layer and weighs their relevance to each other to generate output encodings. The encoder is bidirectional: attention can be placed on tokens both before and after the current token.
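One self-attention head can be sketched as scaled dot-product attention: each token's query is compared with every token's key, the scores are turned into weights with a softmax, and the weights mix the tokens' values. This is a minimal single-head sketch with hypothetical 2-dimensional encodings and projection matrices; the full Transformer runs several such heads in parallel ("multi-head") and adds residual connections and layer normalisation, all omitted here.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention with no mask (encoder style).

    Every token attends to every token, both before and after it.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    weights = [
        softmax([sum(qi * ki for qi, ki in zip(q_row, k_row)) / math.sqrt(d_k) for k_row in k])
        for q_row in q
    ]
    return matmul(weights, v), weights


# Three toy token encodings and hypothetical (normally learned) projection matrices.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]
w_k = [[1.0, 0.0], [0.0, 1.0]]
w_v = [[0.5, 0.0], [0.0, 2.0]]
out, weights = self_attention(x, w_q, w_k, w_v)
for row in weights:
    print([round(w, 2) for w in row])
```

Each row of `weights` sums to 1, and the first token gives non-zero weight to the third token, which comes after it: that is what "bidirectional" means in practice.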

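Each decoder layer consists of three parts: a masked self-attention mechanism, where each token can attend only to itself and the tokens before it; an attention mechanism over the encodings produced by the encoder; and a feed-forward neural network.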
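The two attention steps of a decoder layer can be sketched with one attention function used in two ways: with a causal mask for self-attention, and with keys and values taken from the encoder output for attention over the encodings. This is a minimal sketch on hypothetical toy vectors; learned projections, multiple heads, the feed-forward network, residual connections and layer normalisation are all left out.

```python
import math


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention; with causal=True, position i only sees positions <= i."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if causal:  # mask out future positions so they get zero weight
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights = softmax(scores)
        outputs.append([sum(w * v[d] for w, v in zip(weights, values))
                        for d in range(len(values[0]))])
        all_weights.append(weights)
    return outputs, all_weights


encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # encodings of a 3-token input
decoder_in = [[0.5, 0.5], [1.0, -1.0]]              # the 2 output tokens so far

# 1. Masked self-attention: each output token sees only itself and earlier output tokens.
self_out, self_weights = attention(decoder_in, decoder_in, decoder_in, causal=True)
# 2. Attention over the encodings: queries from the decoder, keys and values from the encoder.
cross_out, cross_weights = attention(self_out, encoder_out, encoder_out)
print([round(w, 2) for w in self_weights[0]])  # [1.0, 0.0]: token 0 cannot see token 1
```

The mask is what lets the decoder be trained on whole sequences at once while still behaving as if it generated tokens one at a time.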

The most important advantage of transformers over recurrent networks is that they process all the tokens of a sequence in parallel instead of one at a time, which shortens the training process significantly.

Google produced the Bidirectional Encoder Representations from Transformers (BERT) model, which is encoder-only and bidirectional. It is pre-trained on unlabelled text and can then be fine-tuned for specific tasks. Its encoder uses a 512-token context window. BERT comes in two sizes: BERT-Base with 110 million parameters and BERT-Large with 340 million parameters.
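Where the 110 and 340 million figures come from can be checked by counting the weights and biases layer by layer. This sketch assumes the configuration of the publicly released BERT checkpoints: a 30,522-token WordPiece vocabulary, 512 positions, 2 segment types, a feed-forward size of 4 × the hidden size, a LayerNorm (scale and bias) after each sub-layer, and a final pooler layer. BERT-Base has 12 layers of size 768 and BERT-Large 24 layers of size 1024.

```python
def bert_parameter_count(layers: int, hidden: int, vocab_size: int = 30522,
                         max_positions: int = 512, token_types: int = 2) -> int:
    """Count the parameters of a BERT encoder plus its pooler (assumed standard config)."""

    def dense(n_in: int, n_out: int) -> int:
        return n_in * n_out + n_out  # weight matrix + bias vector

    ffn = 4 * hidden          # assumed feed-forward size
    layer_norm = 2 * hidden   # scale + bias

    # Token, position and segment embeddings, followed by a LayerNorm.
    embeddings = (vocab_size + max_positions + token_types) * hidden + layer_norm
    # Query, key, value and output projections (the head count does not change the total).
    attention = 4 * dense(hidden, hidden) + layer_norm
    feed_forward = dense(hidden, ffn) + dense(ffn, hidden) + layer_norm
    pooler = dense(hidden, hidden)
    return embeddings + layers * (attention + feed_forward) + pooler


print(f"BERT-Base:  {bert_parameter_count(layers=12, hidden=768):,}")   # 109,482,240
print(f"BERT-Large: {bert_parameter_count(layers=24, hidden=1024):,}")  # 335,141,888
```

The totals, roughly 110 and 335 million, are close to the figures quoted for the two models; most of the parameters sit in the stacked encoder layers rather than in the embeddings.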

Another popular architecture is the Generative Pre-trained Transformer (GPT), which is decoder-only and, as such, easier to train and more suitable for Large Language Models. OpenAI's ChatGPT is the most popular implementation and is now based on the fourth-generation model, GPT-4, which is multimodal. (I will cover text and image generation models in a future article.) OpenAI has not published the size of GPT-4, but it is estimated to have more than a trillion parameters. Decoder models like GPT-4 are pre-trained without labelled data (self-supervised), by learning to predict the next token; ChatGPT is then further fine-tuned, including with reinforcement learning from human feedback.

(to be continued…)

[1] An artificial neural network is an interconnected group of nodes, inspired by a simplification of neurons in a brain. The output of an artificial neuron is fed to the input of other artificial neurons. Each neuron processes the signal it receives and can transmit a signal to other neurons. The connections between neurons (edges) and the neurons themselves have weights, which change during training. The network has multiple layers of neurons, some of which are hidden, effectively making the neural network a kind of black box.

[2] Convolutional neural network (CNN): a type of artificial neural network used primarily for image recognition and processing, due to its ability to recognize patterns in images.