Overview

I wrote a series about Generative AI and published the posts in October and November of 2023. At that time there were a few options for AI music generation, and towards the end of one of those posts I gave a summary of the situation along with a few example models. In this post I report on the current situation for each tool.

MusicFX

MusicLM is an experimental Google model that I reported on back in October. Google has updated the model since then. I did not have access to it when I wrote the original article, but I can now use it under its new name, MusicFX. The project web page has many samples, including a comparison between the output of the latest model and that of the original model described in the paper. The paper also introduces MusicCaps, a dataset of music clips with text captions, released for future research.

MusicLM treats audio synthesis as a language modelling task in a discrete representation space: audio becomes a sequence of tokens, and the model predicts the next token much as an LLM does with text. It is trained on audio-only data, so no annotations are needed. Because there is not enough paired audio-text data to learn text-conditioned embeddings directly, MusicLM uses MuLan, a joint text-music model, which removes the need for captions at training time. Note that automatically captioning music is currently a much harder task than captioning images, for which ample data exists.
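To make the "language modelling over discrete tokens" idea concrete, here is a toy sketch in Python. It is not how MusicLM works internally: as far as I know, MusicLM uses learned tokenizers (w2v-BERT semantic tokens and SoundStream acoustic tokens) with Transformer models. Here, purely as an illustrative assumption, audio samples are uniformly quantized into 16 tokens, a bigram model counts which token follows which, and new audio is sampled one token at a time. All names (`tokenize`, `train_bigram`, `generate`, `detokenize`, `NUM_TOKENS`) are hypothetical.

```python
import math
import random
from collections import Counter, defaultdict

NUM_TOKENS = 16  # toy "vocabulary" size (assumption; real codecs use learned codebooks)


def tokenize(samples, num_tokens=NUM_TOKENS):
    """Map samples in [-1.0, 1.0] to integer tokens 0..num_tokens-1 by uniform quantization."""
    tokens = []
    for s in samples:
        s = max(-1.0, min(1.0, s))  # clip out-of-range samples
        idx = int((s + 1.0) / 2.0 * num_tokens)
        tokens.append(min(idx, num_tokens - 1))  # s == 1.0 would otherwise map past the last bin
    return tokens


def detokenize(tokens, num_tokens=NUM_TOKENS):
    """Map each token back to the centre of its quantization bin."""
    return [((t + 0.5) / num_tokens) * 2.0 - 1.0 for t in tokens]


def train_bigram(tokens):
    """Count how often each token follows each other token (a tiny 'language model')."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, length, rng):
    """Autoregressively sample `length` tokens, each conditioned on the previous one."""
    out = [start]
    for _ in range(length - 1):
        followers = counts.get(out[-1])
        if not followers:  # unseen context: fall back to a uniformly random token
            out.append(rng.randrange(NUM_TOKENS))
            continue
        choices, weights = zip(*followers.items())
        out.append(rng.choices(choices, weights=weights, k=1)[0])
    return out


if __name__ == "__main__":
    sample_rate = 8000  # toy rate, far below MusicFX's 48kHz
    # One second of a 440 Hz sine wave as stand-in "training audio".
    audio = [math.sin(2 * math.pi * 440 * n / sample_rate) for n in range(sample_rate)]
    tokens = tokenize(audio)
    model = train_bigram(tokens)
    new_audio = detokenize(generate(model, start=tokens[0], length=200, rng=random.Random(0)))
    print(len(new_audio), min(new_audio), max(new_audio))
```

The bigram model only looks one token back, so it cannot capture longer musical structure; a model like MusicLM conditions on long token histories (and on MuLan embeddings of the prompt) to stay coherent over many seconds.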

MusicFX can generate music at 48kHz, up to 70 seconds long.
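To get a sense of scale, the sketch below computes how many raw samples a 70-second clip at 48kHz contains, and how large it would be as uncompressed PCM. The stereo, 16-bit format is my assumption for illustration; I don't know what format MusicFX uses internally. Numbers like these are one reason audio models work with compact token sequences rather than predicting every raw sample.

```python
def pcm_size(sample_rate_hz: int, seconds: int, channels: int, bits_per_sample: int):
    """Return (samples per channel, total bytes) for uncompressed PCM audio."""
    samples_per_channel = sample_rate_hz * seconds
    total_bytes = samples_per_channel * channels * bits_per_sample // 8
    return samples_per_channel, total_bytes


# 70 seconds at 48kHz; stereo 16-bit is an assumed format, not a MusicFX spec.
samples, size_bytes = pcm_size(48_000, 70, channels=2, bits_per_sample=16)
print(samples, size_bytes)  # 3360000 13440000
```

That is about 3.36 million samples per channel, or roughly 13.4 MB of raw audio for a single clip.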

I tested the model with an excerpt from one of my short stories, about a post-apocalyptic society and the seers who try to preserve humanity’s knowledge. I pasted in a few paragraphs without any suggestions about genre or other musical properties. Here is the prompt:

Yesterday, as I walked in the valley, listening to the repercussion from the now-and-then screeching wheels of the old cars carrying the nomads to God-knows-where, I encountered one of them Seers. He was the first one I’d seen since my childhood, so I was interested in the man. A pallid face, but I couldn’t discern easily, for the ash clouds had settled over the place once again. I saw the end of the line of cars fleeing from the now-inaudible perils that have won the struggle with Man. Man had surrendered, Man had fled from the disaster area with indelible memories in his mind. The antagonism between the three blocks of the world has caused the fury of the weapons the men have created to be released on themselves. Despite the idea that a World War would last only a few hours, the doom had been delayed, the war had spread in subtle outbursts. The august weapons had shown their most horrid faces, the awe and reverence had turned into a trepidation from the wrath of science.

I have included the two alternatives generated by MusicFX below.

Soundraw

Soundraw was another tool I looked at last October, but at the time I did not have a chance to test it.

You select the genre (a lot of genres are missing, by the way), the mood and the tempo. It can generate up to 3 minutes of instrumental music.

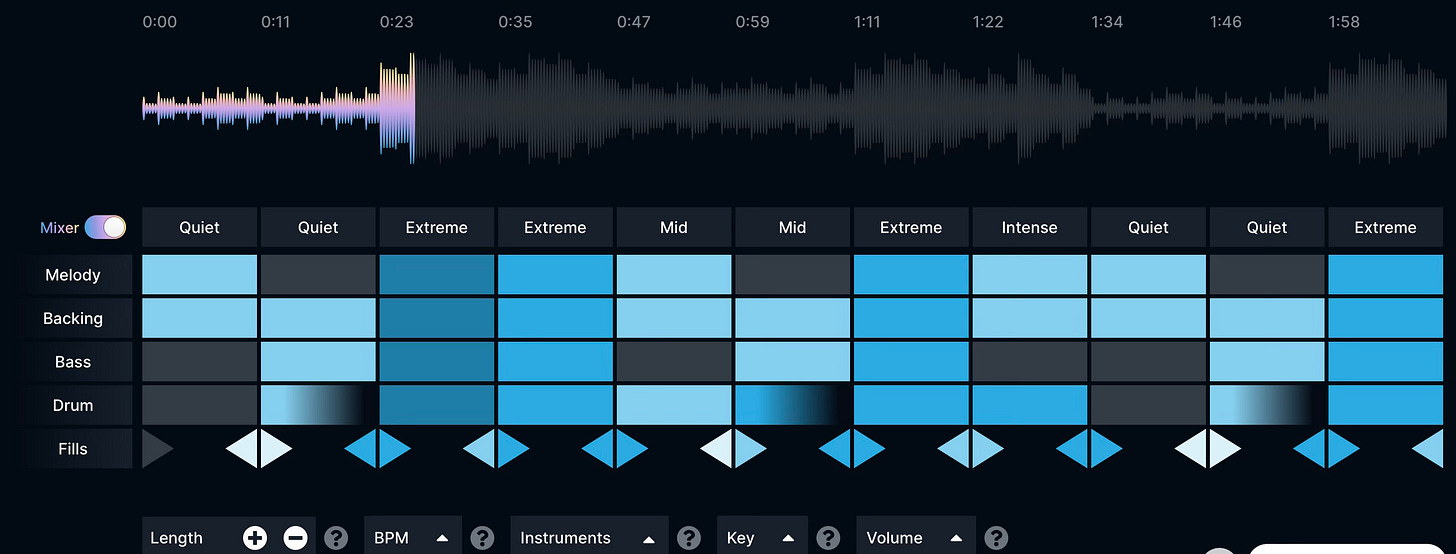

Besides generating music, it lets you customize the result with functions such as shortening the intro or setting the loudness of a particular section. The editing screen is shown below.

The generated music sounds okay, but given the limited control and the lack of sub-genres or other defining features, it will always sound average. I could not include a generated sample, because there is no free plan that lets you download the music.

AIVA

AIVA uses deep learning to analyze and learn from a vast database of music, which lets it create original pieces in a variety of styles and genres. The user selects a generation profile (a genre or style of music), then the key signature and duration. Each generation can produce up to 3 minutes of music. Free accounts get 3 downloads per month, and the generated music is free for personal use. Monetizing the music requires a Pro account.

Although the parameters are simplistic, the generated output is quite promising. I've attached two sample music pieces below.

See this video, which shows an orchestra playing music composed by AIVA and used in a mobile game.

Udio

Udio has released a beta version of its AI music generator. It is free to use during the beta period and creates music based on styles and tags. The embedded tracks below are two rock songs I generated with the prompts “A song about a scientist desperately in love with a girl who spurns his advances, rock” and “a song about the heat death of the universe and the feelings it raises in the mind of a young man, symphonic rock”. The novelty of this generator seems to be that it produces songs with full lyrics, although you can also supply the lyrics yourself. The music quality is probably the best I’ve seen from any generator.

It generates a 30-second piece, which can be extended to up to 5 minutes (10 sections of 30 seconds each). My son is an audio media engineer, and he has looked into Udio in detail in the YouTube video podcast here.

Note that the symphonic rock track sounds genuine, very close to the many bands working in this genre.

Stability AI

Stable Audio 2.0

Stability AI has released Stable Audio 2.0, which can produce up to 3 minutes of music at 44.1kHz stereo. Besides text-to-music generation, Stable Audio 2.0 supports audio-to-audio generation: users upload a piece of music and ask for changes through a text prompt. The model was trained on a licensed audio dataset. A free account can generate up to 30 seconds of music when starting from uploaded audio and up to 3 minutes when generating from a text prompt. Free accounts are given 20 music points, and each generation costs 2 points. Pro accounts have higher limits.
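The free-tier numbers above translate into a simple budget. This sketch assumes a flat cost of 2 points per generation regardless of mode or length, and that every generation uses the maximum length for its mode; Stability AI's actual pricing rules may be more detailed. The constant and function names are my own, not part of any Stability AI API.

```python
FREE_POINTS = 20            # free-account points, as described above
POINTS_PER_GENERATION = 2   # assumed flat cost per generation

# Maximum clip length per mode, in seconds, as described above.
MAX_SECONDS = {"text_to_audio": 180, "audio_to_audio": 30}


def free_tier_budget(mode: str):
    """Return (number of generations, total seconds) a free account could produce in `mode`."""
    generations = FREE_POINTS // POINTS_PER_GENERATION
    return generations, generations * MAX_SECONDS[mode]


print(free_tier_budget("text_to_audio"))   # (10, 1800)
print(free_tier_budget("audio_to_audio"))  # (10, 300)
```

Under these assumptions, a free account gets 10 generations: at most 30 minutes of music from text prompts, or 5 minutes when transforming uploaded audio.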

I generated two pieces of music. The first is a smooth jazz track, which I found really bland. The second is an upbeat progressive metal track, which I also found unoriginal and not very creative. My impression of Stable Audio 2.0 is not great, given alternatives like Udio.

Wrapup

Music generation models have advanced greatly in the six months since I first looked into them. There is growing concern in the music community about how this progress will affect musicians’ lives, starting with composition and extending into every part of music-making. That remains to be seen.
