In my previous posts, I implemented chat and image generation. However, I hard-coded the parameters, which makes it inconvenient to try different settings for chats and image generation.

In this post, I will implement UserSettings, a class that stores the OpenAI parameters, and then use it in chats and image generation.

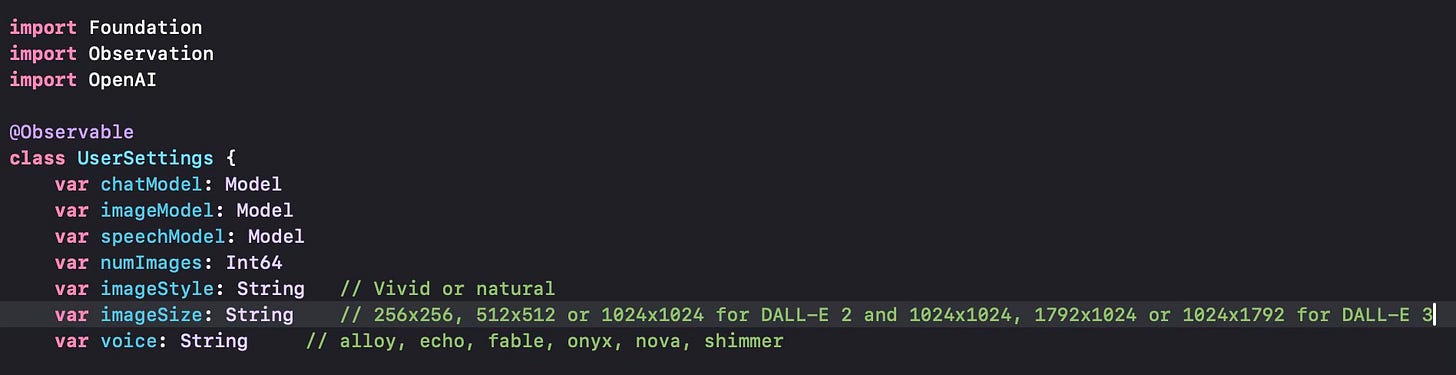

The first step is to define a class to store the user settings. This will be an observable class, so the UI updates automatically whenever one of its properties changes.

The first three settings hold the OpenAI models to be used for chat, image generation and speech generation. OpenAI identifies models by name, so these are strings. The numImages parameter defines how many images should be created for a single prompt. The imageStyle parameter defines the style to be used in image generation, which can currently be either vivid or natural. The imageSize parameter sets the resolution of the generated images. The voice parameter selects the voice to use in speech generation. There will be more parameters for other action types that we may cover in future posts.
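As a rough sketch of what such a class could look like, here is a minimal version built on ObservableObject and @Published. The property name speechModel and all default values are my assumptions for illustration, and the class in the screenshot may be declared differently.

```swift
import Combine

// Hypothetical sketch of the settings class; defaults are assumptions.
final class UserSettings: ObservableObject {
    // Model names are plain strings, as in the OpenAI API.
    @Published var chatModel: String = "gpt-4"
    @Published var imageModel: String = "dall-e-3"
    @Published var speechModel: String = "tts-1"   // assumed property name

    // Image generation parameters.
    @Published var numImages: Int = 1
    @Published var imageStyle: String = "vivid"     // "vivid" or "natural"
    @Published var imageSize: String = "1024x1024"

    // Speech generation parameter.
    @Published var voice: String = "alloy"
}
```

Because every property is marked @Published, any SwiftUI view that observes the object is refreshed when a value changes.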

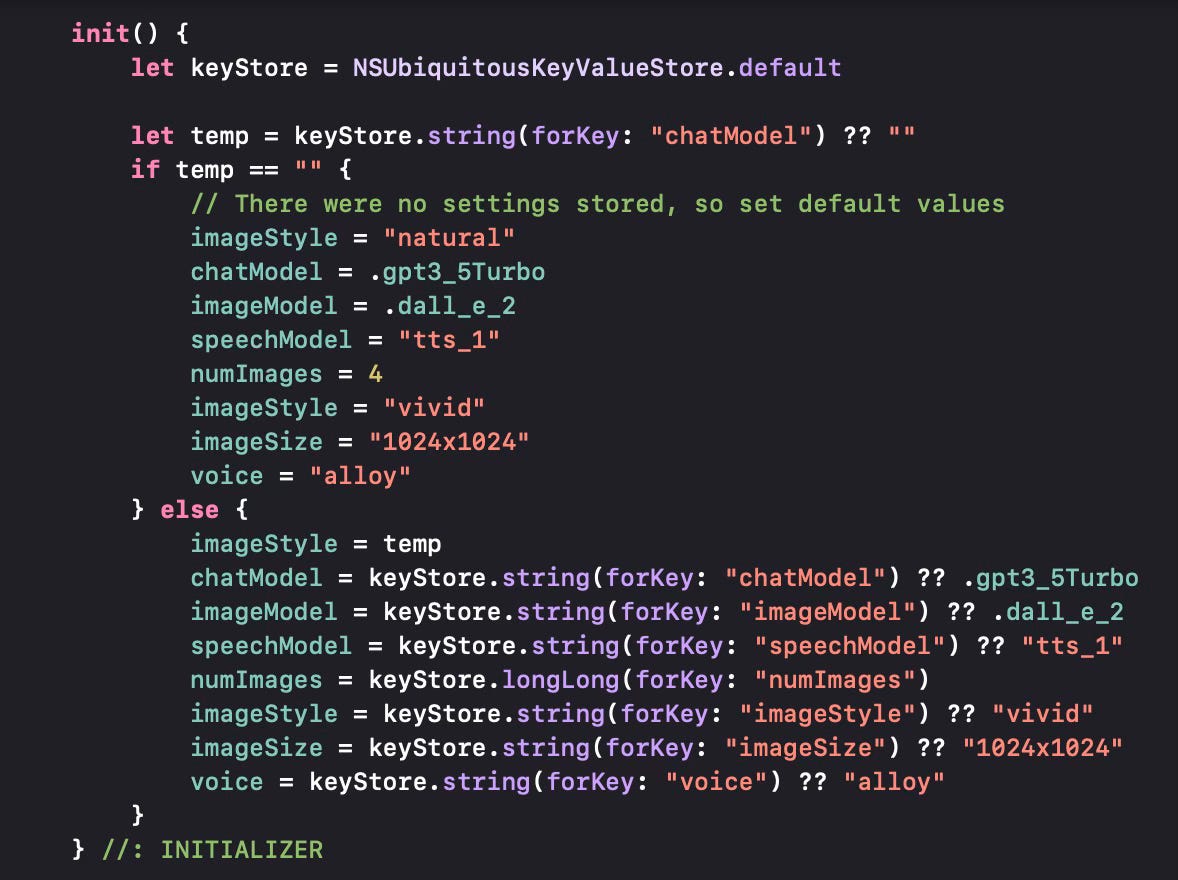

We would like the user settings to survive between launches of the app and to be available across the user's devices. NSUbiquitousKeyValueStore is the key-value store to use for this purpose. We first check whether a setting for the chat model exists. If it does not, we are running the app for the first time, so we set defaults for each of these parameters. If it does, we read it and all the other settings from the key-value store and populate the properties of the class.

The userSettings object can then be saved to the iCloud key-value store, which makes the same settings available on the user's other devices signed in to the same iCloud account.
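The load-or-default logic described above can be sketched as follows. This is not the code from the screenshots: the KeyValueStoring protocol, InMemoryStore, ICloudStore and StoredSettings are hypothetical names I introduce so the logic can be tried without an iCloud account, and only three of the settings are shown. For simplicity the sketch stores numImages as a string.

```swift
import Foundation

// Minimal store interface (hypothetical) so the logic can run without iCloud.
protocol KeyValueStoring: AnyObject {
    func string(forKey key: String) -> String?
    func set(_ value: String?, forKey key: String)
}

// In-memory stand-in, handy for previews and tests.
final class InMemoryStore: KeyValueStoring {
    private var values: [String: String] = [:]
    func string(forKey key: String) -> String? { values[key] }
    func set(_ value: String?, forKey key: String) { values[key] = value }
}

#if canImport(Darwin)
// Thin adapter over the real iCloud key-value store (Apple platforms only).
final class ICloudStore: KeyValueStoring {
    private let store = NSUbiquitousKeyValueStore.default
    func string(forKey key: String) -> String? { store.string(forKey: key) }
    func set(_ value: String?, forKey key: String) { store.set(value, forKey: key) }
}
#endif

struct StoredSettings: Equatable {
    // Default values are assumptions for illustration.
    var chatModel = "gpt-4"
    var imageModel = "dall-e-3"
    var numImages = 1

    private enum Key {
        static let chatModel = "chatModel"
        static let imageModel = "imageModel"
        static let numImages = "numImages"
    }

    // No chat model saved yet means first launch: write and return the defaults.
    static func load(from store: KeyValueStoring) -> StoredSettings {
        let defaults = StoredSettings()
        guard let chatModel = store.string(forKey: Key.chatModel) else {
            defaults.save(to: store)
            return defaults
        }
        var settings = defaults
        settings.chatModel = chatModel
        settings.imageModel = store.string(forKey: Key.imageModel) ?? defaults.imageModel
        settings.numImages = store.string(forKey: Key.numImages).flatMap { Int($0) } ?? defaults.numImages
        return settings
    }

    func save(to store: KeyValueStoring) {
        store.set(chatModel, forKey: Key.chatModel)
        store.set(imageModel, forKey: Key.imageModel)
        store.set(String(numImages), forKey: Key.numImages)
    }
}
```

In the app, `StoredSettings.load(from: ICloudStore())` would read from iCloud, while `InMemoryStore()` gives the same behaviour in a test or preview.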

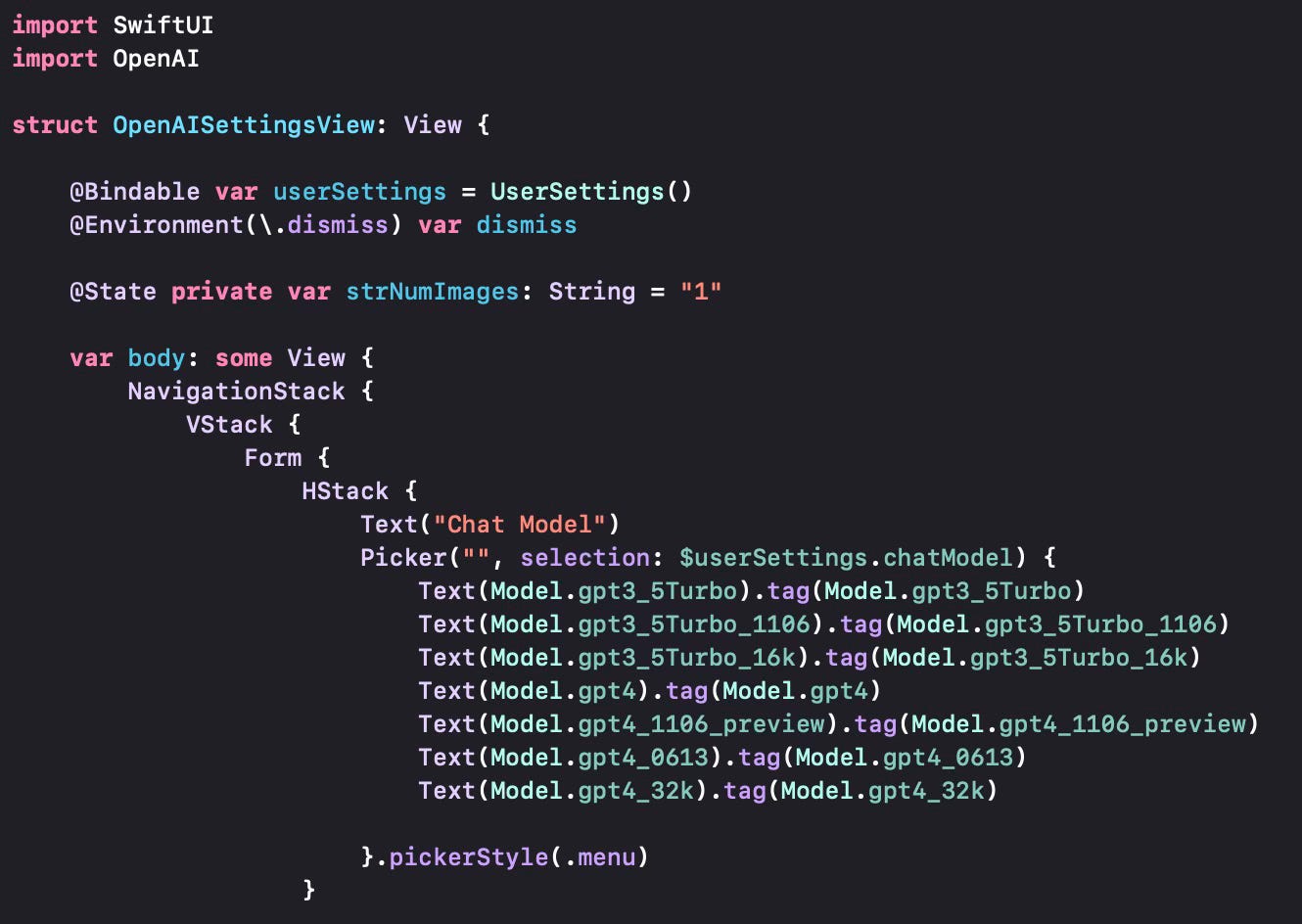

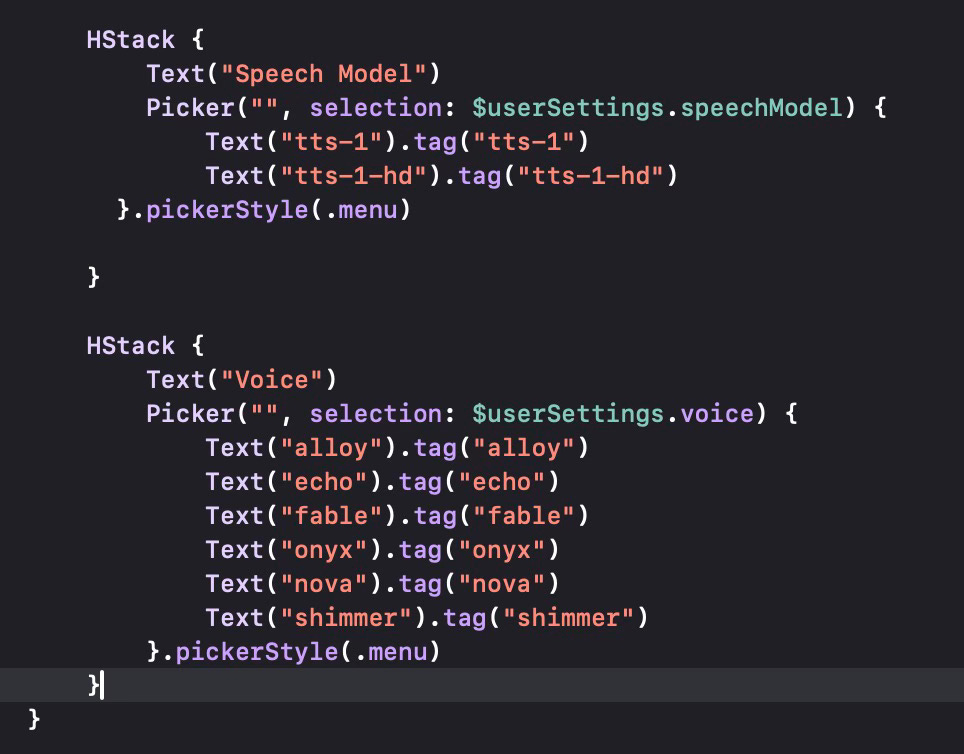

This is enough to set defaults and save the settings, but we should also allow the user to edit them. The view OpenAISettingsView does exactly that. We create a copy of the user settings (which reads the latest saved values from the iCloud key-value store). All parameters are shown in a form. The chat model is selected through a Picker that lists the OpenAI models that can be used for chatting.
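To give an idea of the shape of such a form, here is a minimal sketch with only the chat model picker. The view name ChatModelPickerSketch and the list of model names are assumptions, and the real OpenAISettingsView binds to the user settings object rather than to local state.

```swift
import SwiftUI

// Hypothetical, reduced version of a settings form with a single picker.
struct ChatModelPickerSketch: View {
    // Assumed list of chat models; the actual picker may offer different ones.
    static let chatModels = ["gpt-3.5-turbo", "gpt-4", "gpt-4-turbo"]

    // Local state stands in for the chatModel property of the user settings.
    @State private var chatModel = ChatModelPickerSketch.chatModels[0]

    var body: some View {
        Form {
            Section("Chat") {
                Picker("Chat model", selection: $chatModel) {
                    ForEach(Self.chatModels, id: \.self) { model in
                        Text(model).tag(model)
                    }
                }
            }
        }
    }
}
```

The tag on each row must have the same type as the selection binding, here String, for the picker to update the selected value.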

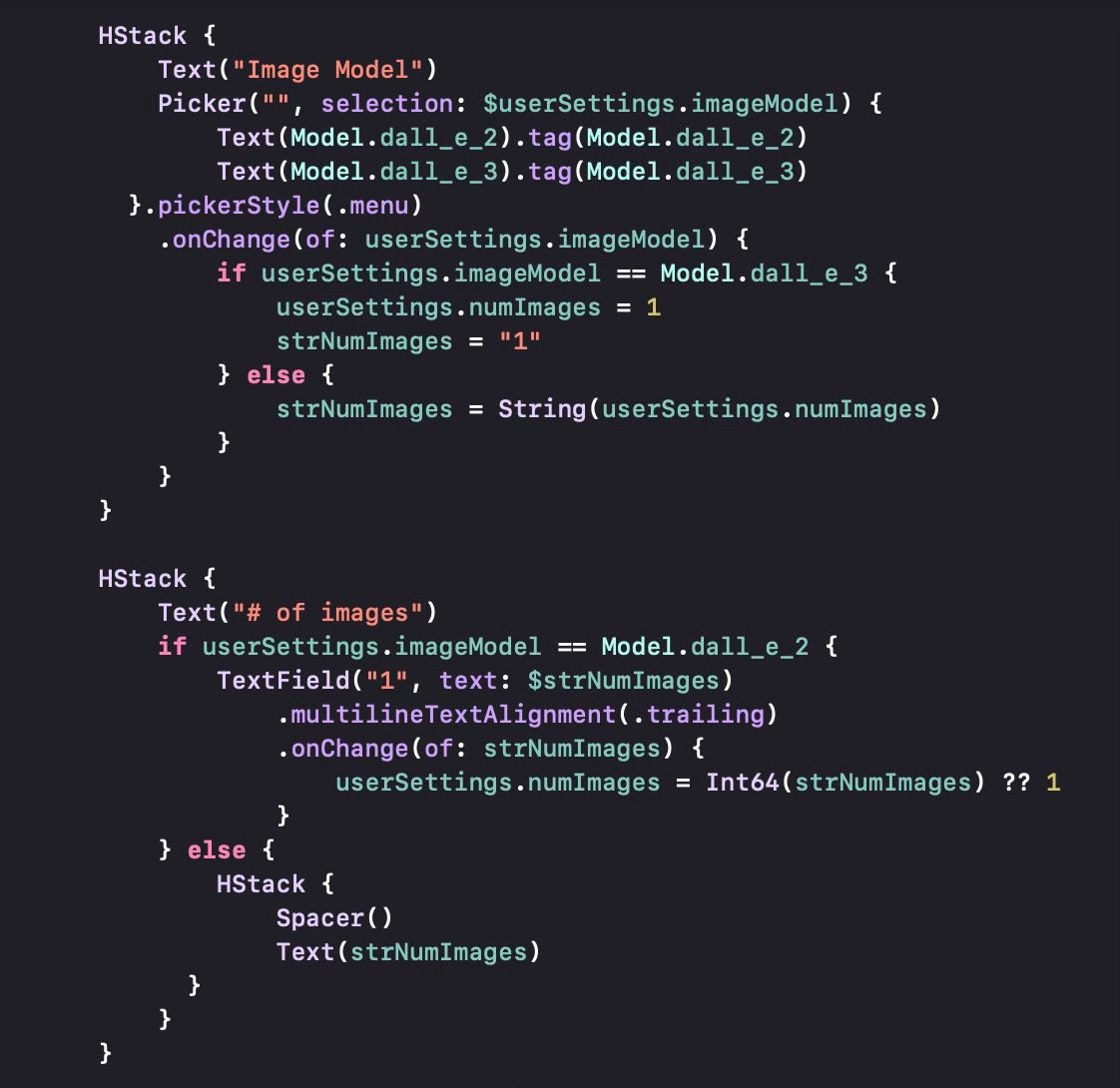

The next set of parameters is about image models and number of images. The allowed image generation models are DALL-E 2 and DALL-E 3. We also make sure that the number of generated images is set to 1 for DALL-E 3 and can be set to a higher number for DALL-E 2.
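The rule for the number of images can be expressed as a small helper. This is a sketch with hypothetical function names. It assumes the model identifiers "dall-e-2" and "dall-e-3" and the OpenAI limits of one image per request for DALL-E 3 and up to ten for DALL-E 2.

```swift
// Allowed range for the number of images per request, by model.
func allowedImageCount(for imageModel: String) -> ClosedRange<Int> {
    imageModel == "dall-e-3" ? 1...1 : 1...10
}

// Clamp a requested count into the range the selected model accepts.
func clampedImageCount(_ requested: Int, for imageModel: String) -> Int {
    let range = allowedImageCount(for: imageModel)
    return min(max(requested, range.lowerBound), range.upperBound)
}
```

A settings form could call clampedImageCount whenever the image model changes, so a count chosen for DALL-E 2 does not carry over to DALL-E 3.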

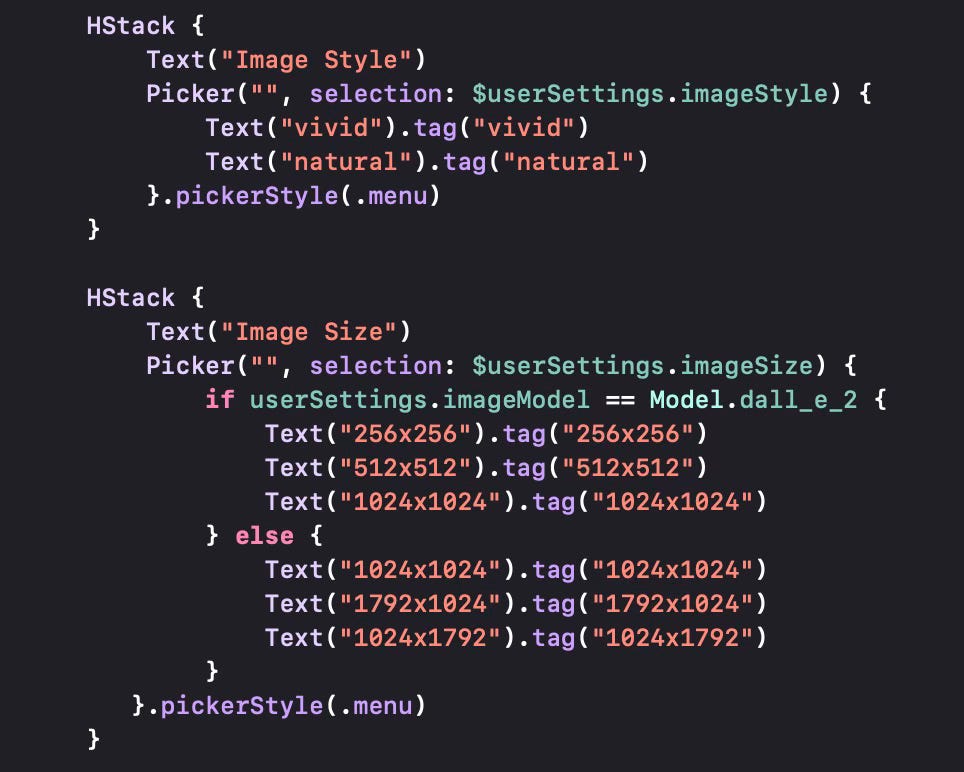

The next setting is the image style, which we get from a picker with “vivid” and “natural” as choices. The available image sizes depend on the image model, so the size picker offers a different set of values for each model.
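One way to model the two sets of sizes is a lookup by model name. The function names below are hypothetical. The size lists are the ones the OpenAI image API accepts for DALL-E 2 and DALL-E 3, and falling back to 1024x1024 is my own choice because it is the only size valid for both models.

```swift
// Image sizes accepted by each model.
func allowedImageSizes(for imageModel: String) -> [String] {
    switch imageModel {
    case "dall-e-3":
        return ["1024x1024", "1792x1024", "1024x1792"]
    default: // treated as "dall-e-2"
        return ["256x256", "512x512", "1024x1024"]
    }
}

// Keep the current size if the model supports it, otherwise fall back.
func validatedImageSize(_ size: String, for imageModel: String) -> String {
    allowedImageSizes(for: imageModel).contains(size) ? size : "1024x1024"
}
```

The size picker can iterate over allowedImageSizes(for:) and the stored size can be passed through validatedImageSize when the model is switched.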

The last two settings are about the speech generation model and the voice to use for speech generation.

With this code in place, we can see all the settings in a form and save revised values to the iCloud key-value store.

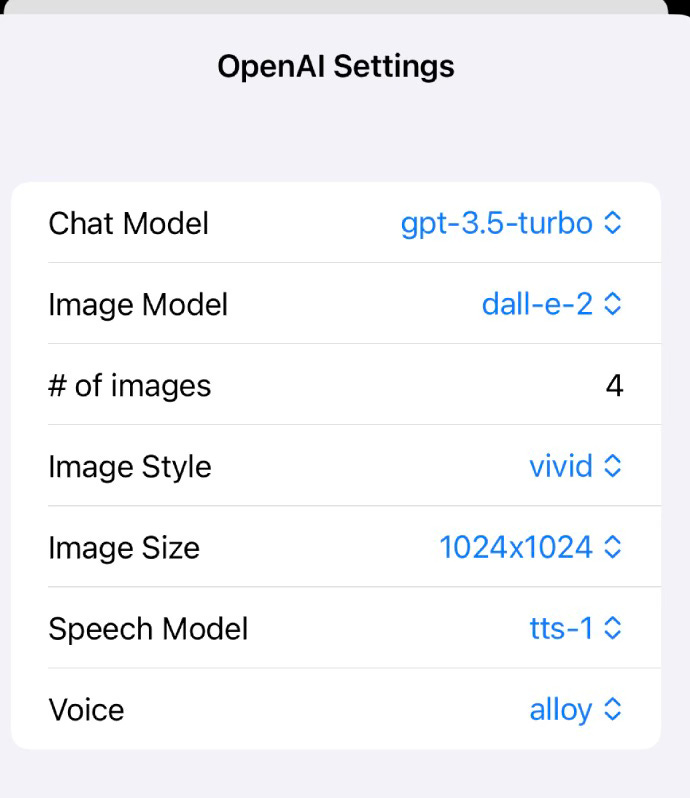

Since we can now use userSettings to store all settings, we should use it in our generation process. We revise ExecuteChatView to reflect this.

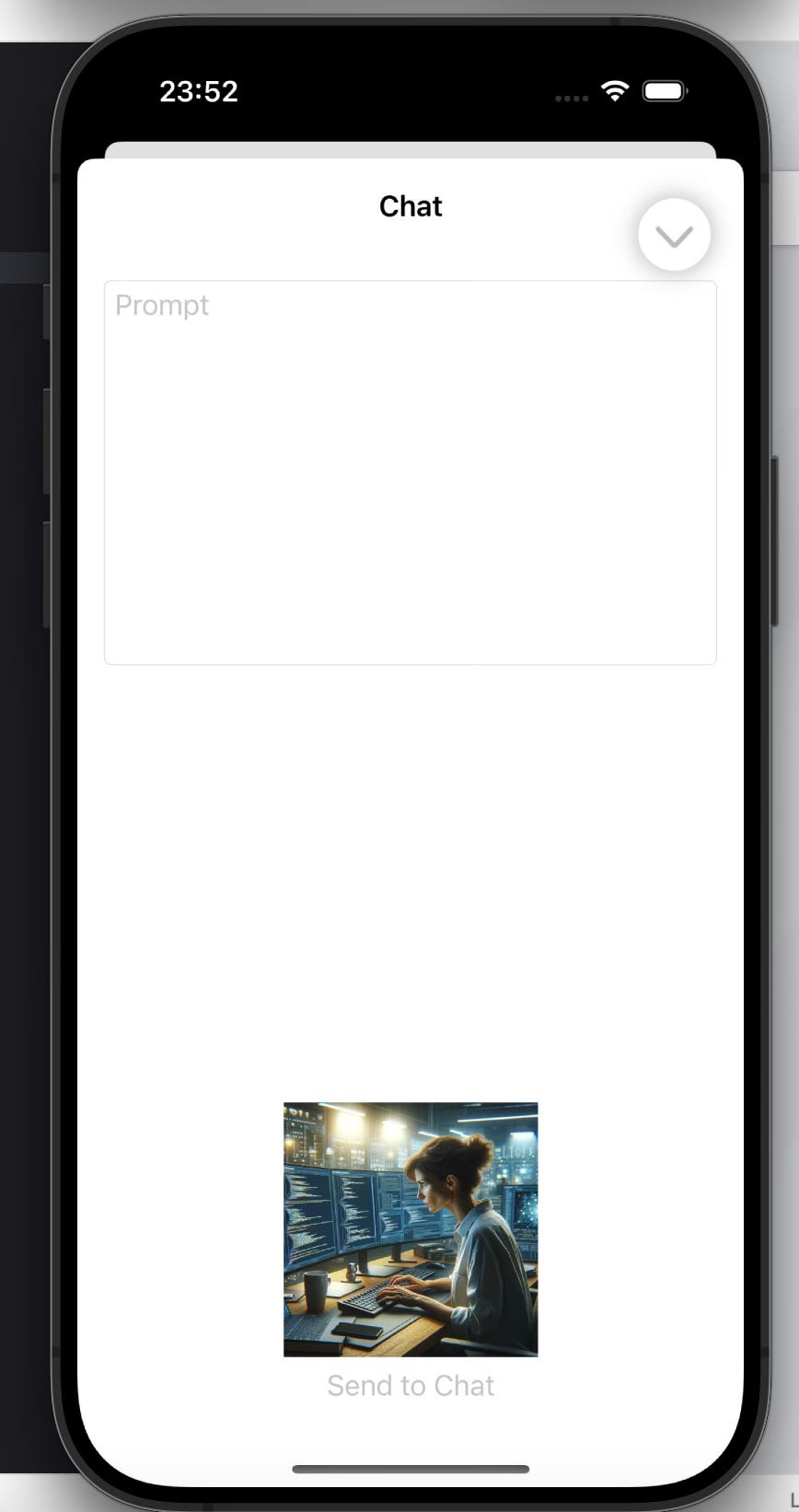

Here is what the Chat screen looks like:

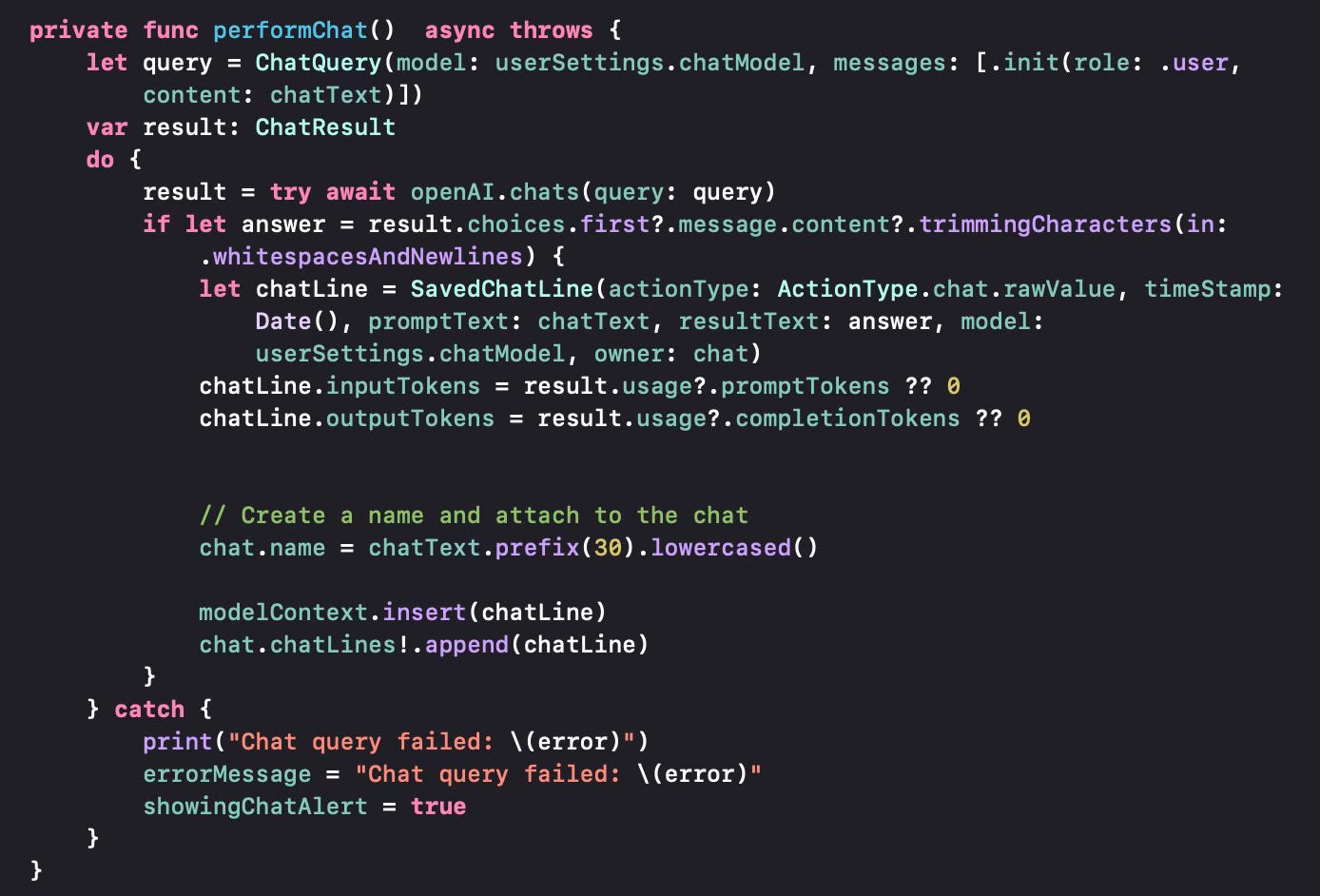

When we type a prompt and send the text to chat, we use a revised version of the performChat function. It uses the chatModel parameter from the user settings.
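To illustrate where the chat model ends up, here is a sketch that builds a request for the OpenAI chat completions endpoint with plain URLRequest. It is not the performChat function from the screenshot, which may rely on a client library. The names makeChatRequest, ChatMessage and ChatRequestBody are hypothetical, and the request is only constructed, not sent.

```swift
import Foundation
#if canImport(FoundationNetworking)
import FoundationNetworking
#endif

struct ChatMessage: Codable {
    let role: String
    let content: String
}

struct ChatRequestBody: Codable {
    let model: String
    let messages: [ChatMessage]
}

// Build (but do not send) a chat completion request using the selected model.
func makeChatRequest(prompt: String, chatModel: String, apiKey: String) throws -> URLRequest {
    var request = URLRequest(url: URL(string: "https://api.openai.com/v1/chat/completions")!)
    request.httpMethod = "POST"
    request.setValue("Bearer \(apiKey)", forHTTPHeaderField: "Authorization")
    request.setValue("application/json", forHTTPHeaderField: "Content-Type")

    // The model comes from the user settings instead of a fixed string.
    let body = ChatRequestBody(
        model: chatModel,
        messages: [ChatMessage(role: "user", content: prompt)]
    )
    request.httpBody = try JSONEncoder().encode(body)
    return request
}
```

The only change compared to a fixed-parameter version is that the model field is filled from the chatModel setting at the time the prompt is sent.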

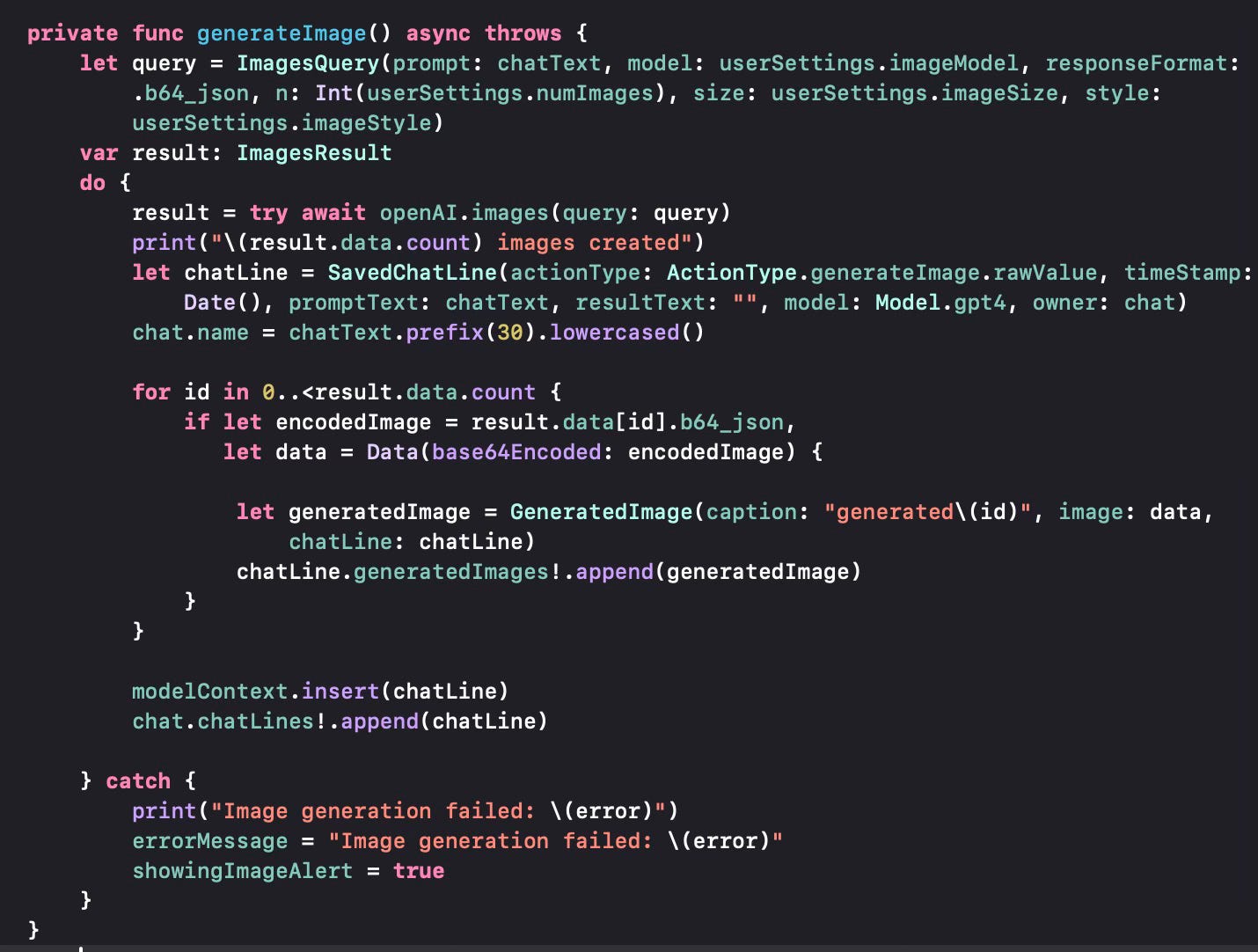

For image generation we have more settings to use.

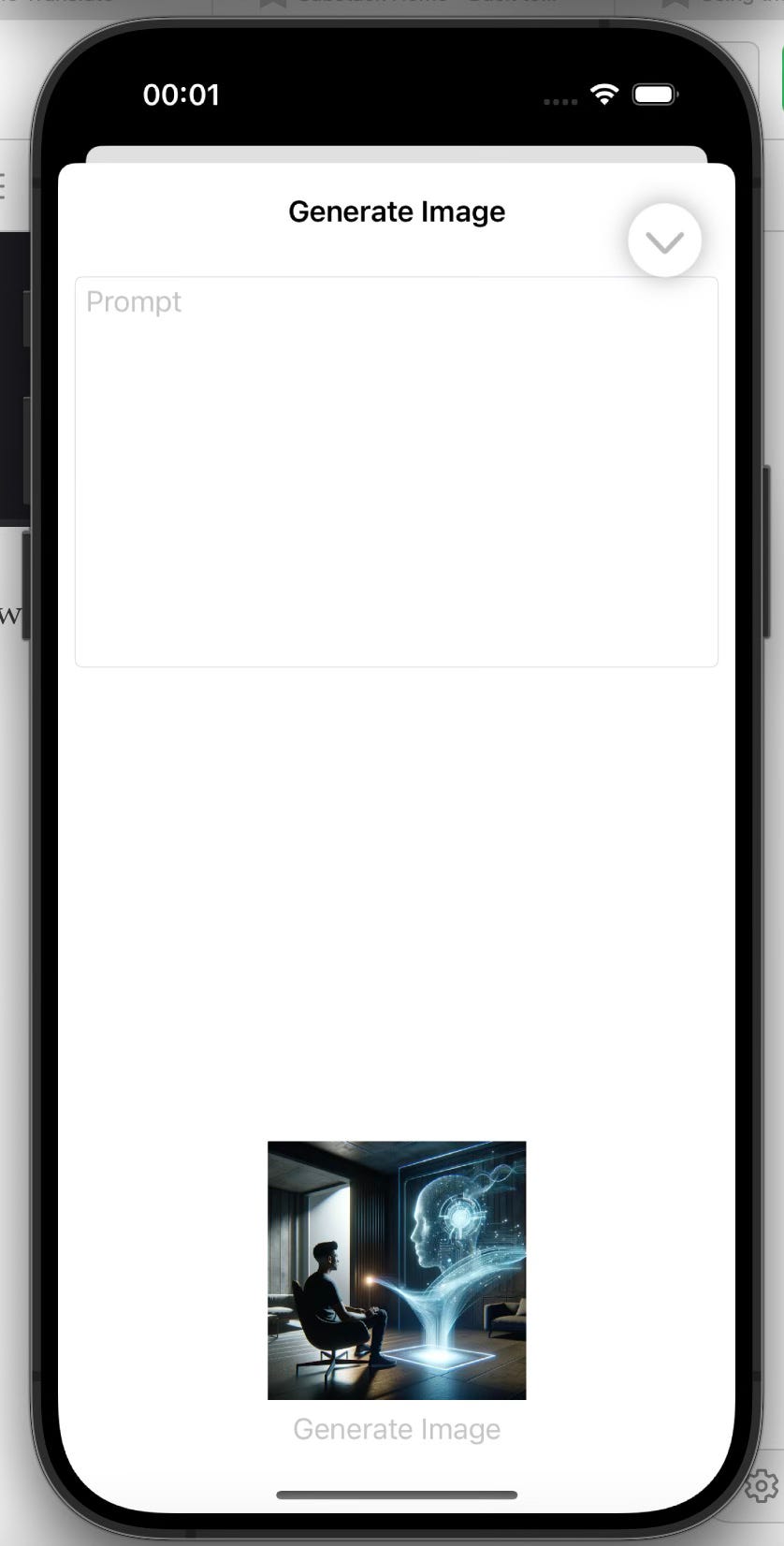

A separate screen lets us provide a prompt and get an image back. When sending the query, we use the imageModel, numImages, imageSize and imageStyle parameters.
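As a sketch of how these four settings could map onto the JSON body of the OpenAI image generation endpoint, consider the following. ImageRequestBody and makeImageRequestBody are hypothetical names, and the post's own code may build the query through a library instead. The sketch assumes the style field should only be sent for DALL-E 3, since the API documents it for that model only.

```swift
import Foundation

// JSON body for POST /v1/images/generations.
struct ImageRequestBody: Encodable {
    let model: String
    let prompt: String
    let n: Int
    let size: String
    let style: String?   // left out of the JSON when nil
}

// Map the user settings onto the request body.
func makeImageRequestBody(prompt: String,
                          imageModel: String,
                          numImages: Int,
                          imageSize: String,
                          imageStyle: String) -> ImageRequestBody {
    ImageRequestBody(
        model: imageModel,
        prompt: prompt,
        n: numImages,
        size: imageSize,
        // Assumption: only DALL-E 3 accepts a style.
        style: imageModel == "dall-e-3" ? imageStyle : nil
    )
}
```

Encoding the result with JSONEncoder gives the payload to send, with the style key present only for DALL-E 3.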

In subsequent posts, I will show how to save the generated images to your iCloud Drive and will also cover other actions such as Generate Edited Image, Speech Transcription and so on.