Early Impressions on Apple Intelligence Beta

Testing the new Apple Intelligence updates in macOS 15.1

Apple Intelligence - macOS 15.1 Developer Beta

I have finally managed to update my development computer to macOS 15.1, which enables the Apple Intelligence Developer Beta.

This trial started when I realized that Apple Intelligence can be used on any compatible machine, i.e. any Mac with an Apple Silicon chip. So I opened Settings on my MacBook Pro with an M2 Max chip and set the region to U.S. and the language to U.S. English.

In Software Update, I selected the macOS Sequoia 15.1 Developer Beta and installed it (this works only if you are logged in with an Apple ID that is registered as a developer account).

The install was straightforward and added an Apple Intelligence and Siri option, where I requested access to Apple Intelligence by going on a waitlist. Previous articles I read suggested a few minutes waiting time but this was not to happen. I got a message about 20 hours later and could turn on Apple Intelligence.

I do not have a compatible iPhone or iPad, so I could not test Apple Intelligence on those devices, where it could well be more useful day to day than it is on a Mac.

The Model

Apple published a paper about the on-device model that it uses for Apple Intelligence. Here are some facts we learn from the paper:

The model has approximately 3 billion parameters. This technically makes it a Small Language Model, similar to Microsoft’s 3.8 billion parameter Phi-3 mini or Google’s Gemini Nano (which exists in two versions, with 1.8 billion and 3.25 billion parameters). These models are specialized for running on-device without Internet access. Because requests do not have to leave the device, they are better for privacy and security than LLMs that can only be reached through the Internet (unless you build your own dedicated server with an open-source model).

The model uses the Transformer architecture (as most LLMs now do) and is decoder-only, meaning it generates text one token at a time, each conditioned on the tokens before it. The weights are fixed during training and stored on the device.

Pre-training has been conducted with licensed materials and data collected by Apple’s own web crawler (thus questions about rights to the data used for training are not completely resolved).
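To get a feel for why a model of about 3 billion parameters can live on a laptop or phone, here is a rough back-of-the-envelope sketch of how much storage the weights alone would need. The bit widths below are illustrative assumptions of mine, not figures taken from Apple’s paper, and the helper name `weights_size_gb` is made up for this example.

```python
def weights_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    params = 3e9  # "approximately 3 billion parameters"
    # Assumed precisions for illustration only, not Apple's published numbers.
    for bits in (16, 8, 4):
        size = weights_size_gb(params, bits)
        print(f"{bits:>2}-bit weights: ~{size:.1f} GB")
```

At 16 bits per weight this comes to roughly 6 GB, and at 4 bits roughly 1.5 GB, which is the kind of footprint that makes on-device use plausible. The estimate ignores activations, caches and everything else the runtime needs.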

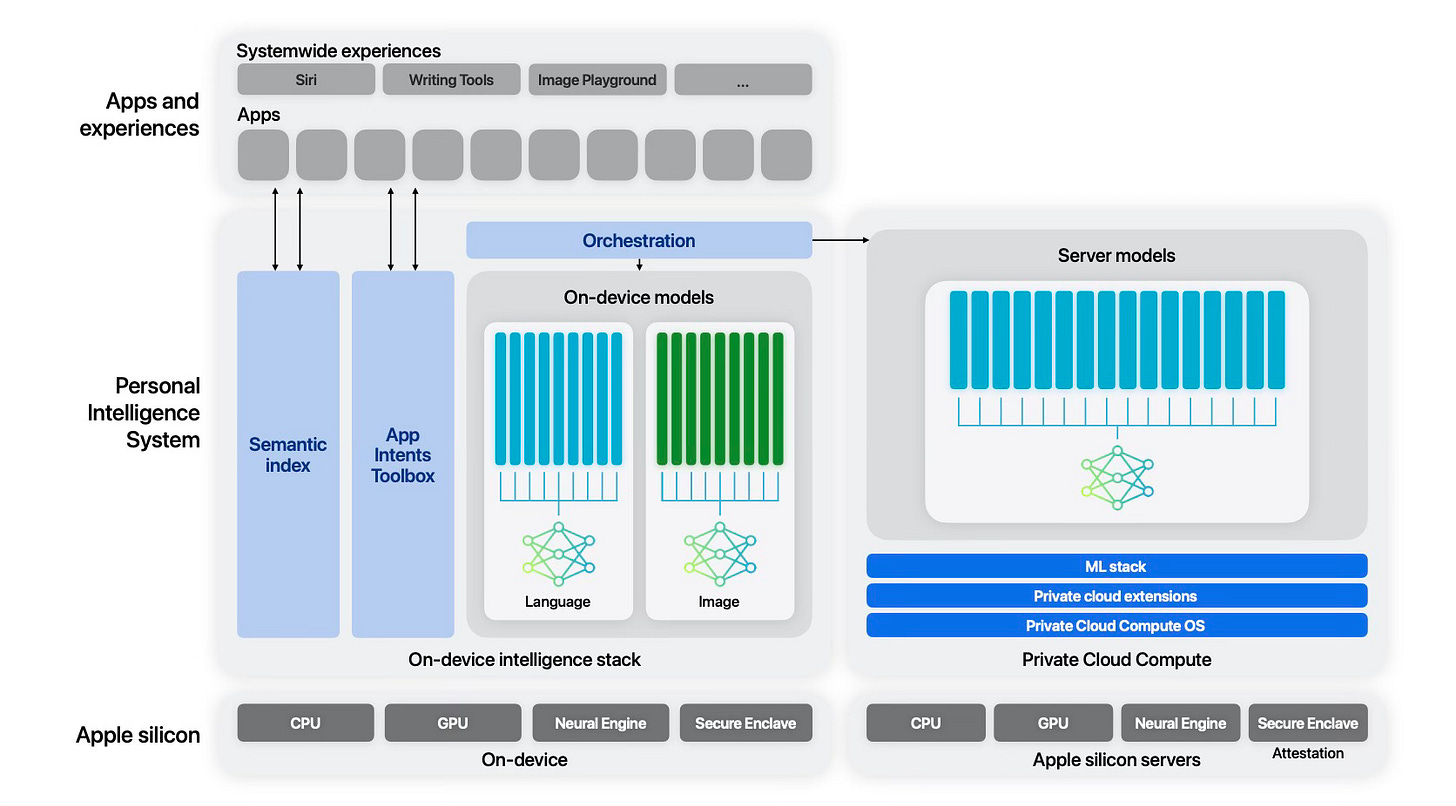

Apple has included a diagram showing the architecture of their on-device and server-based models (on the Apple Private Cloud).

As we can glimpse from this architectural diagram, Apple has heavily optimized the model to map the functionality to existing Apple services on the devices, so as to make practical and pragmatic use of AI features.

Writing Tools

My first attempt was to use Writing Tools. I used it within Substack on an earlier paragraph of this post, the short description of how I gained access to Apple Intelligence. The standard right-click context menu has a new submenu named Writing Tools, with options such as Summarize, Rewrite, Make Friendly, Make Professional and Make Concise. Here is the result of a Make Professional action on that paragraph:

The installation process was straightforward, and it added an Apple Intelligence and Siri option. I requested access to Apple Intelligence by joining a waitlist. Previous articles I read indicated a potential waiting time of a few minutes, but this was not the case. Approximately 20 hours later, I received a message informing me that I could activate Apple Intelligence.

Siri

Siri has different visual cues now, with a glow at the edge of the screen. It is also possible to type requests to Siri instead of speaking them. I am not a heavy Siri user, but I will certainly test this later, when I’m able to train Siri to recognize my trigger phrase. For some reason I could not get past the initial voice training that Siri requires before the trigger phrase can be activated.

Mail

Once you have read an email (scrolled to the end), Mail displays a Summarize button that generates a summary of the message and presents it to you. I used it on an incoming message from Digital Theatre announcing a new recorded play and got the summary below:

I also tried it on an email from MIT confirming my membership in their Open Learning program.

It is still early in the beta period, and I felt the summaries were a bit terse and could have included more useful information in some cases, but it is a good beginning.

Photos

Photos benefits from on-device AI features immediately, but I suspect this is only the beginning. The obvious feature is the Memories section. Previously you could search for photos using metadata already associated with them, such as locations or the People in the photos (you could register people yourself, and Photos would also assign names to faces in the background, well before Apple Intelligence was designed). Now this extends to free-text search using the on-device language model. Here is the first example I tried: I asked Photos to find all photos of me wearing a red shirt.

The result is impressive. I tried different variations of this search and all seem to work well.

Safari

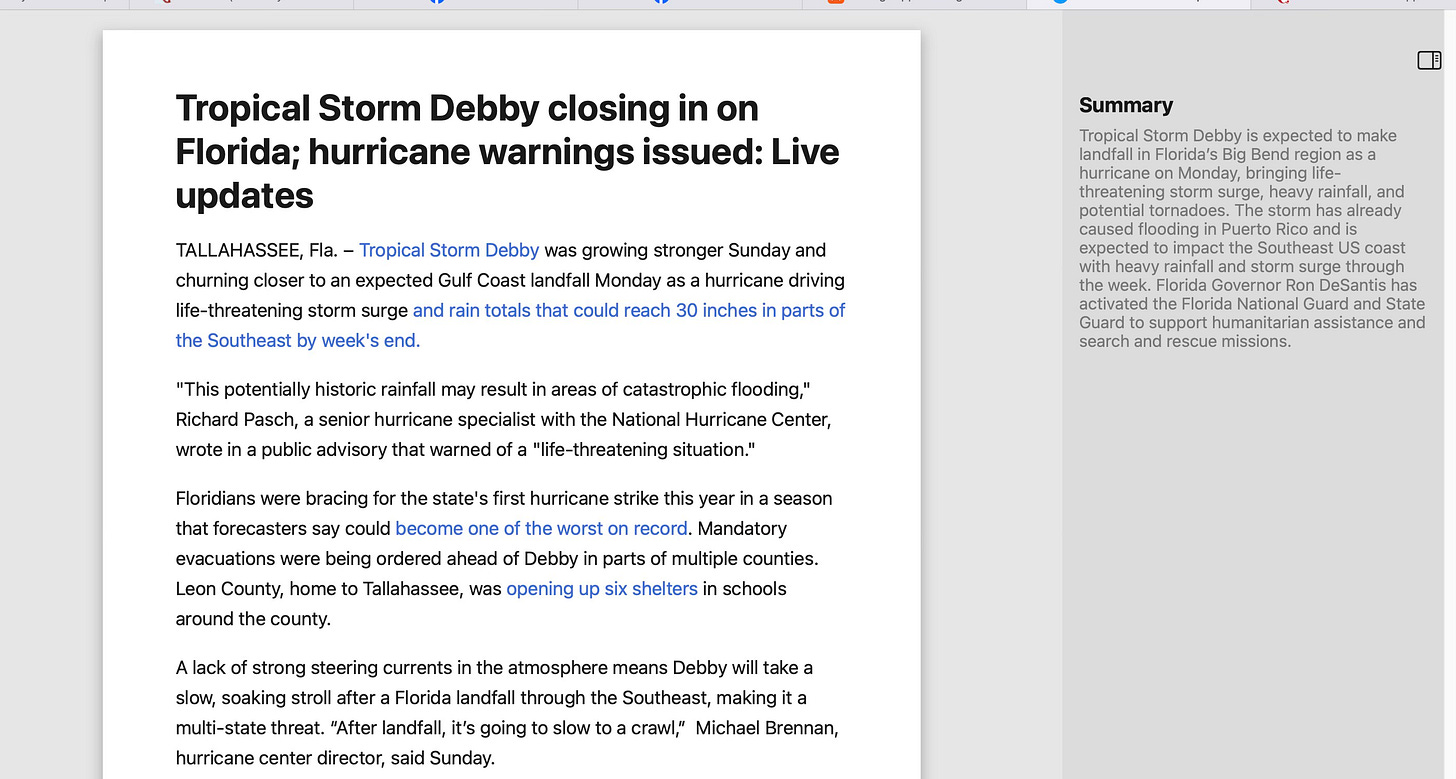

When in Reader mode, a Summarize button can summarize the article being read.

Apple Intelligence Report

This is an interesting report that can be exported from a new section under Settings/Privacy and Security. It covers what the model has done for you in a given time period (currently the two options are 15 minutes and 7 days; I suppose this will change in the future). The result is a JSON file that shows in detail what Apple Intelligence has done for you.
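Since the export is plain JSON, a few lines of Python are enough to get a first look at it. The sketch below does not assume anything about the report’s schema, which I have not seen documented; it only lists the top-level keys (or item types) and the type of each value. The file name in the usage line is hypothetical, so substitute the path of your own export.

```python
import json
from collections import Counter


def summarize_json(data):
    """Shallow summary: value type per key for an object, type counts for a list."""
    if isinstance(data, dict):
        return {key: type(value).__name__ for key, value in data.items()}
    if isinstance(data, list):
        return dict(Counter(type(item).__name__ for item in data))
    return {"value": type(data).__name__}


def summarize_report(path):
    """Load an exported report from disk and summarize its top level."""
    with open(path, encoding="utf-8") as f:
        return summarize_json(json.load(f))


if __name__ == "__main__":
    import sys

    # Usage (hypothetical file name): python summarize.py Apple_Intelligence_Report.json
    if len(sys.argv) > 1:
        for name, kind in summarize_report(sys.argv[1]).items():
            print(f"{name}: {kind}")
```

Starting with a shallow summary like this is a cautious way to explore an undocumented file before writing anything that depends on specific field names.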

Conclusion

This post is based on a few days’ use of the first Developer Beta of Apple Intelligence and as such should not be taken as indicative of the final functionality. I look forward to Apple delivering more of the features promised at WWDC 2024 and may write future posts about them.