Image Playground API

Apple released new developer betas (Beta 2) of iOS 18.2 and macOS 15.2 last week. iOS 18.1 and macOS 15.1 got a lukewarm reception because they shipped with only a few Apple Intelligence features, but this next batch of releases delivers some neat AI additions. I covered these in two posts over the last two weeks.

One of these features is the Image Playground application. It is essentially an image generator built on top of an on-device diffusion model. For the time being the model can only generate images in the animation and illustration styles, but I expect this to change in the future.

I wanted to find out what we can do with the image generation model through the use of the Apple APIs.

It looks like Apple has released a new Image Playground API with this beta. Since I am a SwiftUI developer, I will use it from SwiftUI code, but I'm sure the same can be done with UIKit-based (or AppKit-based) code as well.

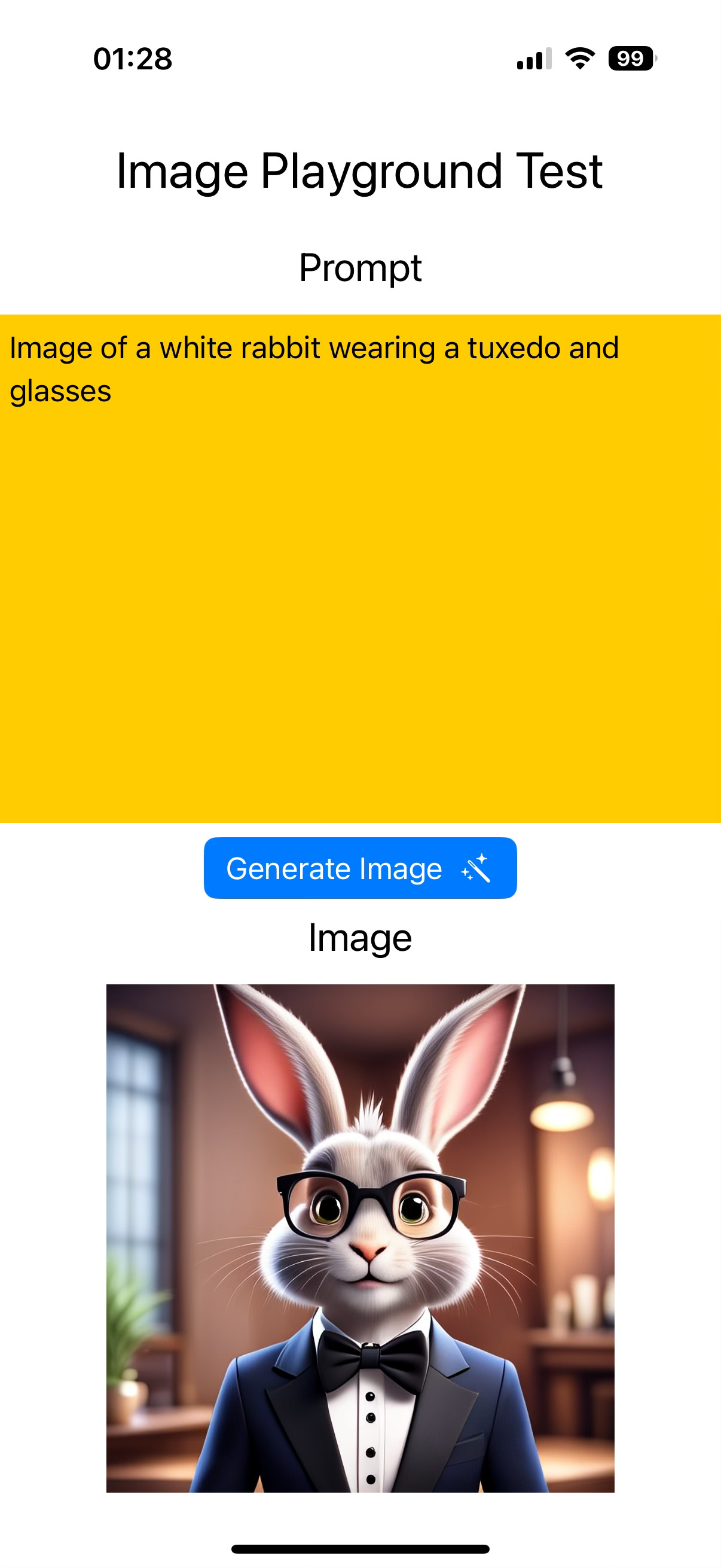

What I had in mind was a simple one-screen application that lets the user provide textual prompts and generate images with the on-device model that Image Playground uses.

When Image Playground first launched, I had to put my name on a waiting list. Until that request was approved, Image Playground just told me I was on the waiting list and exited gracefully. Once I was approved, I was able to use Image Playground.

Apple has done a good job of providing this API (ImagePlayground) to developers. I also realised during this coding session that the whole Image Playground functionality is captured in a simple API, and that the Image Playground application we saw essentially consists of a single call to it. We'll look into this in a minute.

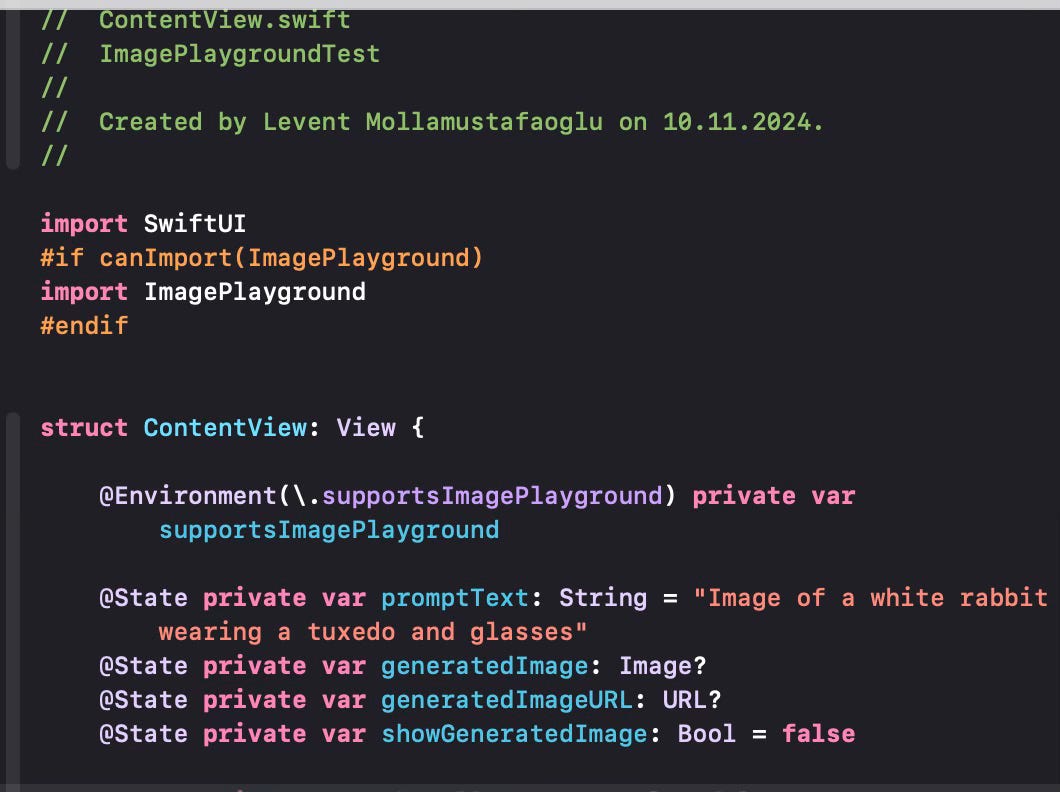

I started by creating a SwiftUI application. I did not want any storage at this point, so any generated images will not be saved.

One thing we must do first is check whether ImagePlayground is supported. As you will recall, Apple Intelligence features are supported on iPhone 15 Pro or later, on Macs with Apple silicon, and on iPads with an M1 chip or later (plus the iPad mini with A17 Pro). This is the first availability check. ImagePlayground is available on the iOS 18.2 and macOS 15.2 betas. This is the second availability check. If the device and OS running the application fit this description, the SwiftUI environment value shown here (supportsImagePlayground) will be true and you will be able to use the API.
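The two checks can be sketched roughly as follows. This is a minimal sketch rather than the code from the screenshot: the view name `AvailabilityBadge` is hypothetical, and the `@available` annotation is an assumption that only matters if the app's deployment target is lower than iOS 18.2 / macOS 15.2. Note that the environment value is spelled `supportsImagePlayground` in the SDK.

```swift
import SwiftUI
import ImagePlayground

// Hypothetical helper view that reports whether Image Playground can be used.
// The @available annotation is the OS-version check.
@available(iOS 18.2, macOS 15.2, *)
struct AvailabilityBadge: View {
    // Device-level check: true only when this device can run Image Playground.
    @Environment(\.supportsImagePlayground) private var supportsImagePlayground

    var body: some View {
        Label(
            supportsImagePlayground ? "Image Playground available"
                                    : "Image Playground unavailable",
            systemImage: supportsImagePlayground ? "checkmark.circle"
                                                 : "xmark.circle"
        )
    }
}
```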

I defined a prompt variable (promptText) that will store the user prompt and gave it a default value. I also defined two optional variables to store the generated image and the URL where this generated image is stored by the API. showGeneratedImage controls when the ImagePlayground interface is going to be activated.
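In text form, the state described above might look like the sketch below. The names `promptText`, `generatedImageURL` and `showGeneratedImage` come from this post; `generatedImage` and the default prompt string are assumptions made for illustration, and the body is only a placeholder.

```swift
import SwiftUI

struct ContentView: View {
    // The user's prompt, with a placeholder default (assumed wording).
    @State private var promptText = "A penguin on a frozen lake"
    // The generated image, once there is one (property name assumed).
    @State private var generatedImage: Image?
    // Where the API stored the generated image.
    @State private var generatedImageURL: URL?
    // Set to true to present the Image Playground sheet.
    @State private var showGeneratedImage = false

    var body: some View {
        // Placeholder body; the real interface is built up in the next steps.
        Text(promptText)
    }
}
```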

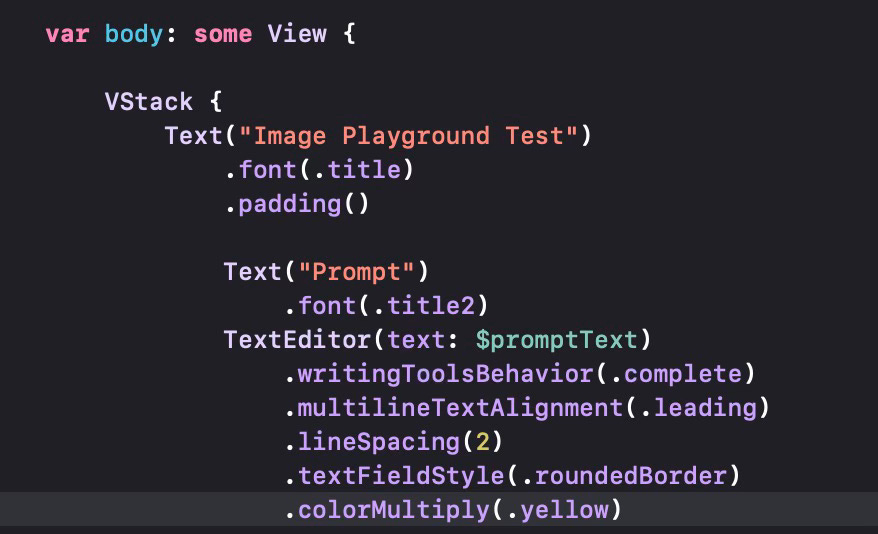

I wanted a very simple interface, consisting of a VStack that holds all the elements on screen. I put a title at the top declaring that this is the Image Playground Test application. Below it I put a label for the prompt and the input field itself, a TextEditor, which allows long, multi-line prompts. I also added the .writingToolsBehavior modifier to the TextEditor to enable Writing Tools, which is a good fit for prompts. This would even give us ChatGPT support, enabling much more complex prompts to generate the image.
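A minimal sketch of that layout is shown below. The view name `PromptEditor`, the fonts, the frame height and the choice of `.complete` as the Writing Tools behaviour are assumptions; the screenshot may differ in these details.

```swift
import SwiftUI

// Hypothetical sub-view holding the title, the label and the prompt editor.
struct PromptEditor: View {
    @Binding var promptText: String

    var body: some View {
        VStack(alignment: .leading, spacing: 12) {
            Text("Image Playground Test")
                .font(.title)
            Text("Prompt")
                .font(.headline)
            // TextEditor allows long, multi-line prompts.
            TextEditor(text: $promptText)
                .frame(minHeight: 120)
                // Opt in to the full Writing Tools experience for this field.
                .writingToolsBehavior(.complete)
        }
        .padding()
    }
}
```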

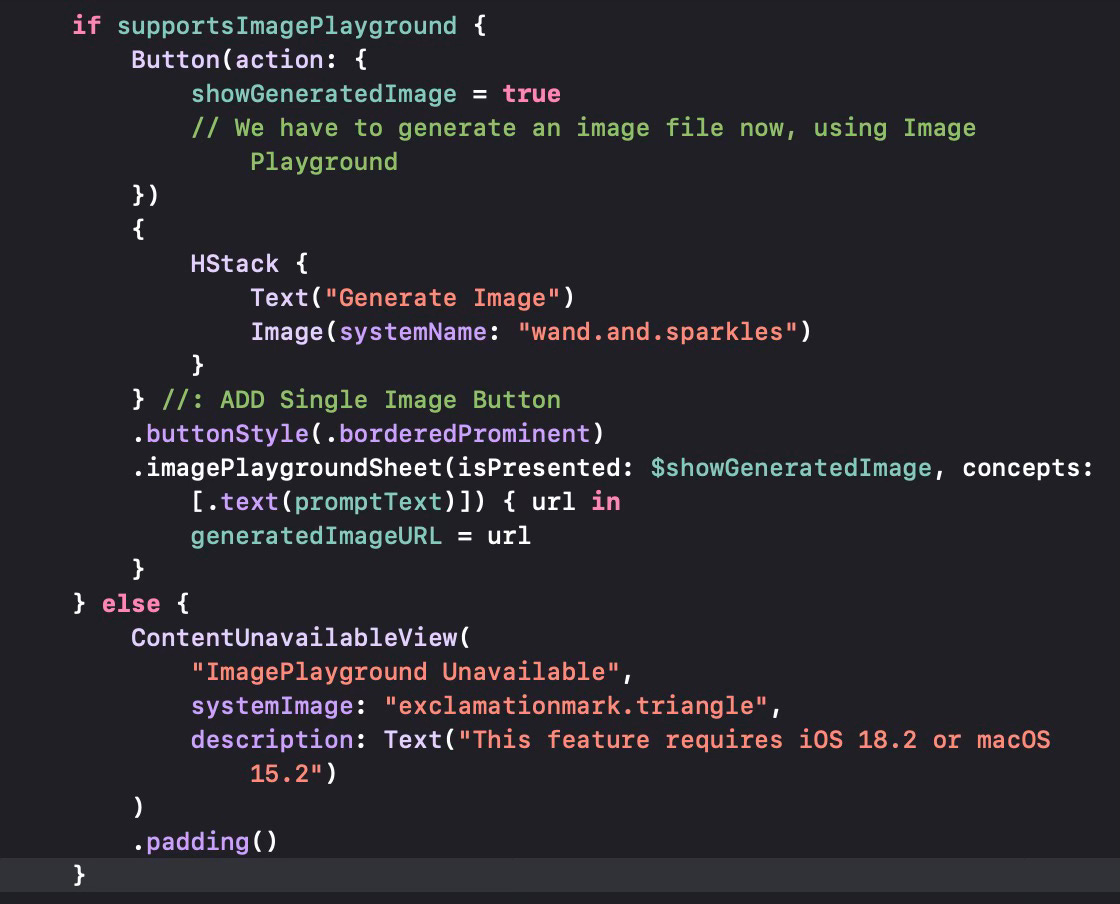

Now we have to add the other interface elements. If the device/OS supports Image Playground, we define a button that generates the image using the API. We attach the .imagePlaygroundSheet modifier to the button with two simple parameters. The first is a Boolean binding that determines whether the ImagePlayground generation sheet is presented (it is set to true whenever the user presses the button). The second passes the initial input for the generation (in this case, the text prompt). If the sheet produces an actual image, we set generatedImageURL to the url returned by the sheet.

If the device/OS does not support ImagePlayground, we display a ContentUnavailableView to tell the user that ImagePlayground is not supported.
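The button, the sheet and the fallback can be sketched as below. The view name `GenerateSection`, the button title and the wording of the unavailable message are assumptions. The `.imagePlaygroundSheet` call uses the `isPresented:concept:onCompletion:` form, with the optional source image and cancellation handler left out.

```swift
import SwiftUI
import ImagePlayground

// Hypothetical sub-view: shows the generate button or an "unavailable" notice.
@available(iOS 18.2, macOS 15.2, *)
struct GenerateSection: View {
    @Environment(\.supportsImagePlayground) private var supportsImagePlayground

    let promptText: String
    @Binding var generatedImageURL: URL?
    @State private var showGeneratedImage = false

    var body: some View {
        if supportsImagePlayground {
            Button("Generate Image") {
                // Presents the Image Playground sheet.
                showGeneratedImage = true
            }
            .imagePlaygroundSheet(
                isPresented: $showGeneratedImage,
                concept: promptText,
                onCompletion: { url in
                    // Called when the user accepts an image in the sheet.
                    generatedImageURL = url
                }
            )
        } else {
            ContentUnavailableView(
                "Image Playground not supported",
                systemImage: "exclamationmark.triangle",
                description: Text("This device or OS version cannot run Image Playground.")
            )
        }
    }
}
```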

One issue I had was that I could not make this work on an iOS simulator. Although the simulator I used was an iOS 18.2 simulator with Apple Intelligence enabled, it did not have Image Playground and I did not see a way to force the simulator to download it (most likely because we do not go through the waiting list etc. for the feature to be enabled). In this case the application, as expected and programmed, displayed a warning that the feature was not available.

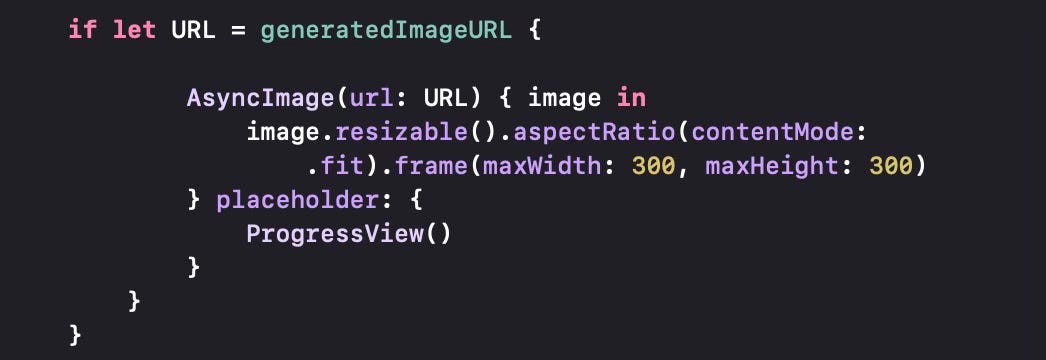

This next section covers the display of the generated image. We get the image from the URL and display it on screen.
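Displaying the result could look roughly like this. It is a sketch under assumptions: the view name `GeneratedImageView`, the placeholder and the frame height are illustrative choices, not necessarily what the screenshot shows.

```swift
import SwiftUI

// Hypothetical sub-view: shows the generated image once a URL is available.
struct GeneratedImageView: View {
    let generatedImageURL: URL?

    var body: some View {
        if let url = generatedImageURL {
            // AsyncImage loads the image from the URL and shows a spinner meanwhile.
            AsyncImage(url: url) { image in
                image
                    .resizable()
                    .scaledToFit()
            } placeholder: {
                ProgressView()
            }
            .frame(maxHeight: 300)
        }
    }
}
```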

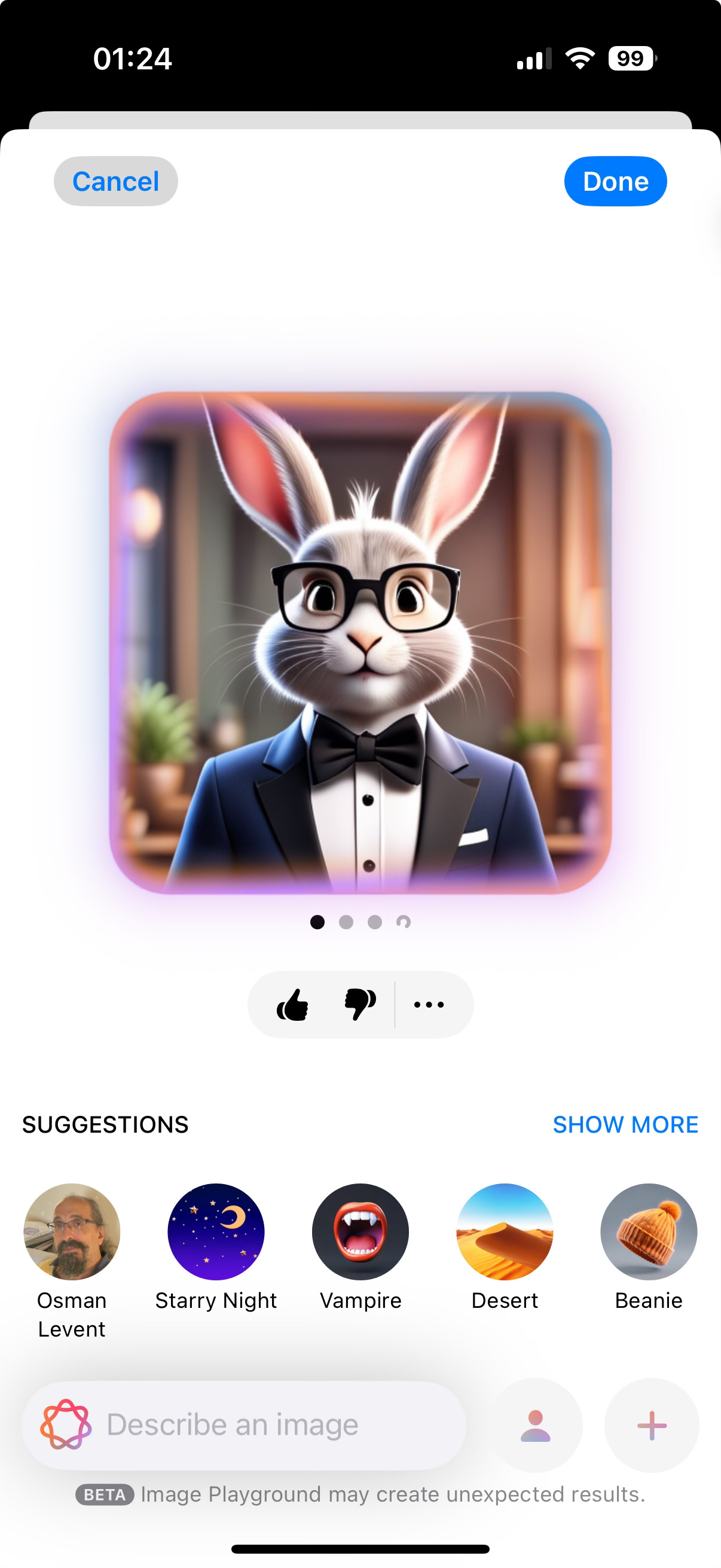

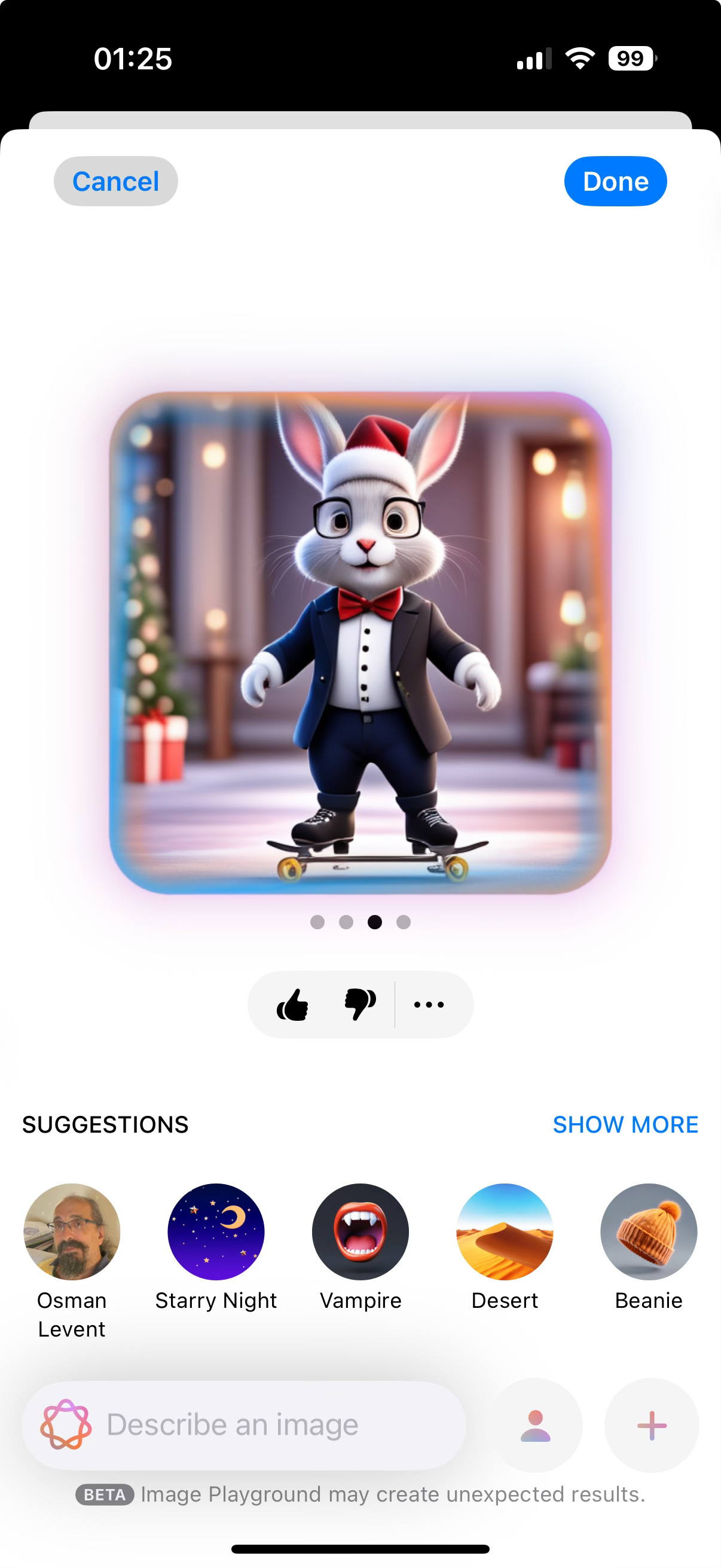

The activated sheet basically has the full ImagePlayground application interface. We can update the generated image by providing additional prompts (in this example I added skates and Santa clothes).

Once an image has been selected, it is returned from the API and can now be displayed on the application screen.

I also noticed that any image generated through the API and returned to the main application gets stored, so the Image Playground application itself will also have the images. Thus the API gives you not only the functionality, but also the storage (through iCloud) for the generated images.

Exporting the Image

Now we attempt to export the image generated through the API. It is already on screen, displayed by the AsyncImage call.

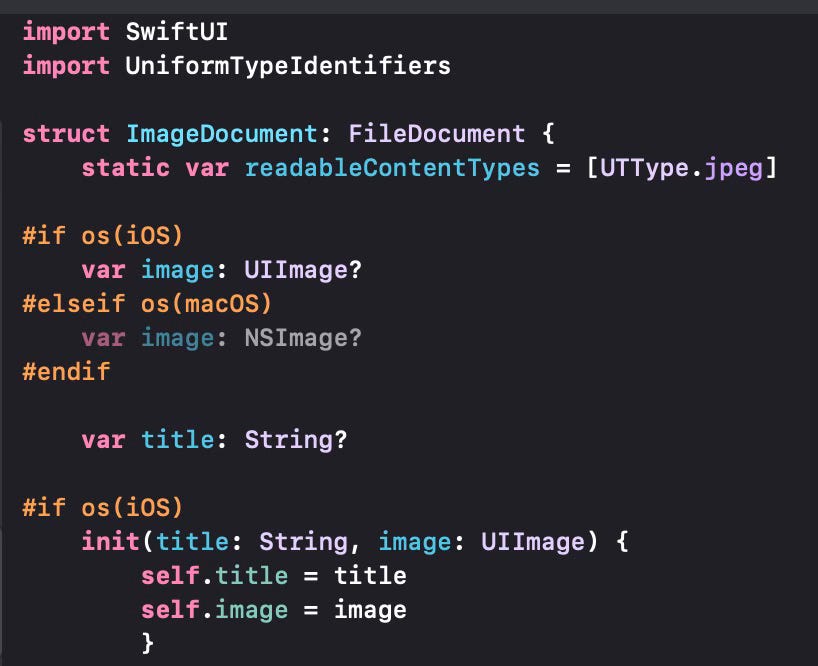

An easy way to export images (or other documents) to iCloud Drive is to use the FileDocument protocol available in SwiftUI. I need a type conforming to FileDocument that can handle images.

ImageDocument handles JPEG images. The code has conditional variants depending on whether we build for iOS or macOS.

The only things we have to implement are the initialiser, which prepares the data and stores it in the document's properties, and a fileWrapper function, which actually writes the image to the file (in JPEG format, in this case). We define an array of these structures, although we will export only one image at this time.
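A document type along these lines might look like the sketch below. The `PlatformImage` alias, the private `jpegData()` helper, the compression quality of 0.9 and the specific errors thrown are assumptions for illustration. The read initialiser is included because the FileDocument protocol requires it, even though this application only exports.

```swift
import SwiftUI
import UniformTypeIdentifiers

#if canImport(UIKit)
import UIKit
typealias PlatformImage = UIImage   // iOS / iPadOS
#elseif canImport(AppKit)
import AppKit
typealias PlatformImage = NSImage   // macOS
#endif

struct ImageDocument: FileDocument {
    // The document reads and writes JPEG files.
    static var readableContentTypes: [UTType] { [.jpeg] }

    var image: PlatformImage

    init(image: PlatformImage) {
        self.image = image
    }

    // Required by FileDocument: build the document from an existing file.
    init(configuration: ReadConfiguration) throws {
        guard let data = configuration.file.regularFileContents,
              let image = PlatformImage(data: data) else {
            throw CocoaError(.fileReadCorruptFile)
        }
        self.image = image
    }

    // Writes the image out as JPEG data.
    func fileWrapper(configuration: WriteConfiguration) throws -> FileWrapper {
        guard let data = jpegData() else {
            throw CocoaError(.fileWriteUnknown)
        }
        return FileWrapper(regularFileWithContents: data)
    }

    // Platform-specific JPEG encoding.
    private func jpegData() -> Data? {
        #if canImport(UIKit)
        return image.jpegData(compressionQuality: 0.9)
        #else
        guard let tiff = image.tiffRepresentation,
              let bitmap = NSBitmapImageRep(data: tiff) else { return nil }
        return bitmap.representation(using: .jpeg,
                                     properties: [.compressionFactor: 0.9])
        #endif
    }
}
```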

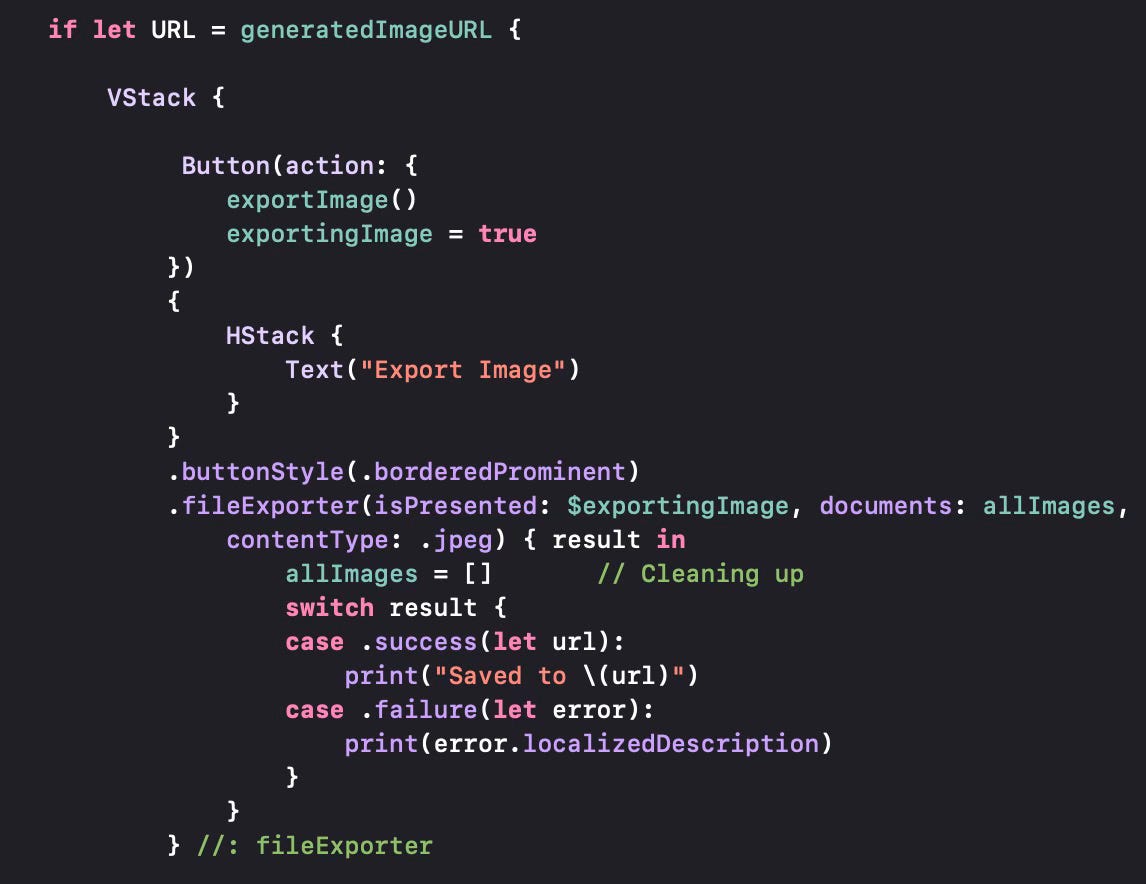

Using this structure, we will add the export button and the functionality to the main code. We group this export button with the AsyncImage previously implemented, in a VStack.

If there is a valid URL, then we display a button to export the image. When the user presses the button, we invoke exportImage (shown below) and use the .fileExporter modifier that will export all ImageDocument structures (prepared by exportImage, but in our case there is a single image).
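The button and the exporter can be sketched as follows. To keep the example compilable on its own, the hypothetical `ExportSection` view is generic over the document type and takes a `makeDocuments` closure standing in for exportImage; in the application described here the document type would be ImageDocument. The `isExporting` flag and the logging in the completion handler are also assumptions.

```swift
import SwiftUI
import UniformTypeIdentifiers

// Hypothetical sub-view: export button plus the file exporter.
struct ExportSection<Document: FileDocument>: View {
    let generatedImageURL: URL?
    // Stand-in for exportImage: builds the documents to export from the URL.
    let makeDocuments: (URL) -> [Document]

    @State private var documents: [Document] = []
    @State private var isExporting = false

    var body: some View {
        // Only offer the export button when there is an image to export.
        if let url = generatedImageURL {
            Button("Export Image") {
                documents = makeDocuments(url)
                // Present the exporter only if a document could be prepared.
                isExporting = !documents.isEmpty
            }
            .fileExporter(
                isPresented: $isExporting,
                documents: documents,
                contentType: .jpeg
            ) { result in
                switch result {
                case .success(let urls):
                    print("Exported to \(urls)")
                case .failure(let error):
                    print("Export failed: \(error.localizedDescription)")
                }
            }
        }
    }
}
```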

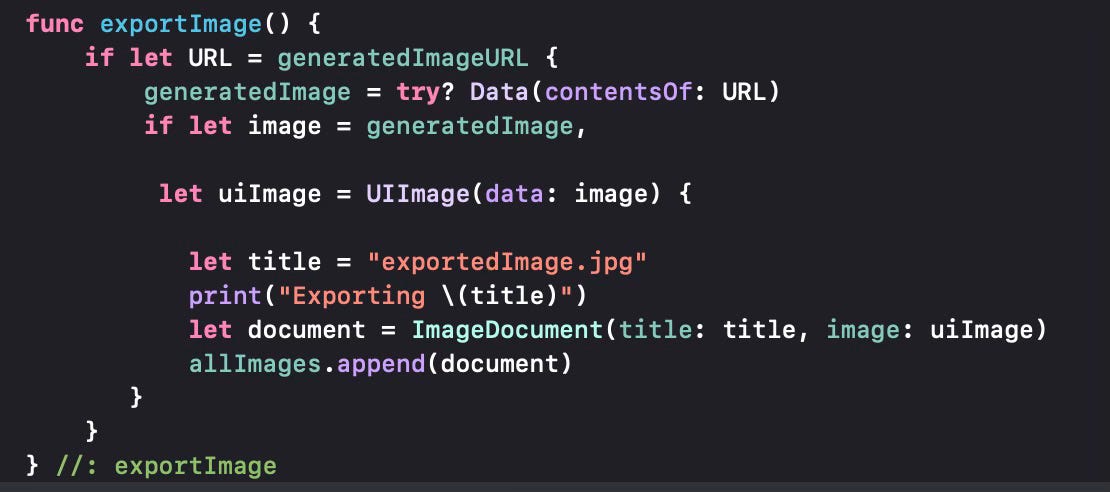

The export is done by using the url once again to read the contents into a generic Data value, then converting that to a UIImage and adding it to the ImageDocument array we declared earlier. (We would need something similar using an NSImage under macOS.)
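The URL-to-image step might be sketched as below. The function name `loadImage(from:)` and the `LoadedImage` alias are hypothetical, and the sketch assumes the URL returned by the sheet points to a local file, so a synchronous read is acceptable.

```swift
import Foundation

#if canImport(UIKit)
import UIKit
typealias LoadedImage = UIImage   // iOS / iPadOS
#elseif canImport(AppKit)
import AppKit
typealias LoadedImage = NSImage   // macOS
#endif

// Reads the file at `url` into Data and decodes it as a platform image.
// Returns nil if the file cannot be read or is not a valid image.
func loadImage(from url: URL) -> LoadedImage? {
    guard let data = try? Data(contentsOf: url) else { return nil }
    return LoadedImage(data: data)
}
```

An exportImage function could then call `loadImage(from:)` with generatedImageURL and, if it returns an image, wrap it in an ImageDocument and store that in the array handed to .fileExporter.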

I believe this API will be improved in future releases, along with the improvements in the Image Playground application itself.